I don’t want to be the kind of science blogger who constantly complains about science fiction, but sometimes I can’t help myself.

When I blogged about zero-point energy a few weeks back, there was a particular book that set me off. Ian McDonald’s River of Gods depicts the interactions of human and AI agents in a fragmented 2047 India. One subplot deals with a power company pursuing zero-point energy, using an imagined completion of M theory called M* theory. This post contains spoilers for that subplot.

What frustrated me about River of Gods is that the physics in it almost makes sense. It isn’t just an excuse for magic, or a standard set of tropes. Even the name “M* theory” is extremely plausible, the sort of term that could get used for technical reasons in a few papers and get accidentally stuck as the name of our fundamental theory of nature. But because so much of the presentation makes sense, it’s actively frustrating when it doesn’t.

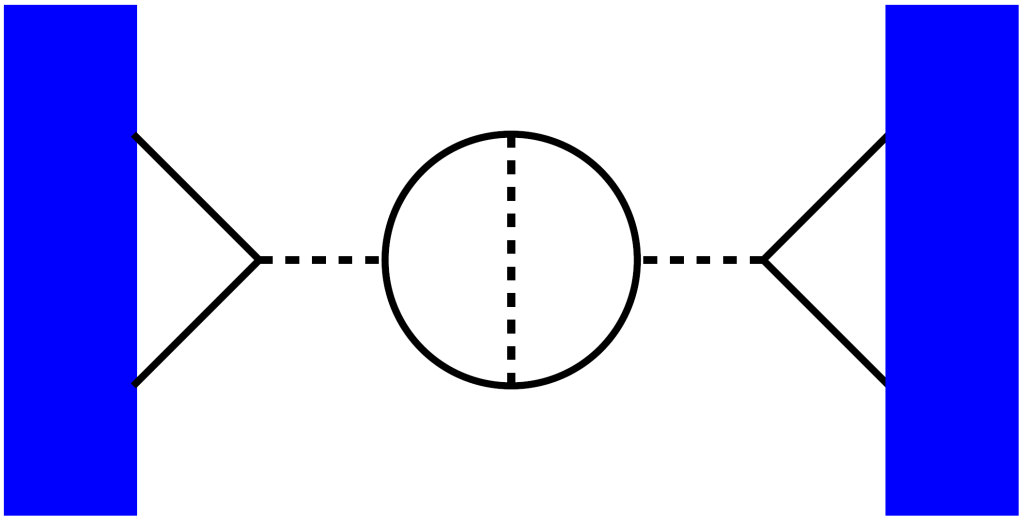

The problem is the role the landscape of M* theory plays in the story. The string theory (or M theory) landscape is the space of all consistent vacua, a list of every consistent “default” state the world could have. In the story, one of the AIs is trying to make a portal to somewhere else in the landscape, a world of pure code where AIs can live in peace without competing with humans.

The trouble is that the landscape is not actually a real place in string theory. It’s a metaphorical mathematical space, a list organized by some handy coordinates. The other vacua, the other “default states”, aren’t places you can travel to; they’re just other ways the world could have been.

Ok, but what about the multiverse?

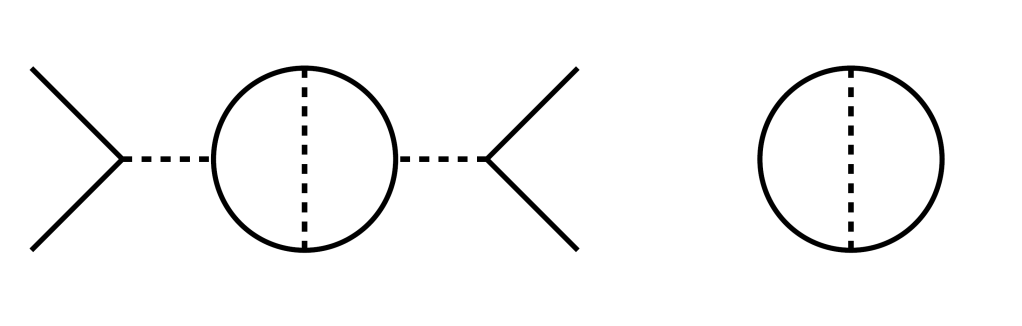

There are physicists out there who like to talk about multiple worlds. Some think they’re hypothetical, others argue they must exist. Sometimes they’ll talk about the string theory landscape. But to get a multiverse out of the string theory landscape, you need something else as well.

Two options for that “something else” exist. One is called eternal inflation, the other is the many-worlds interpretation of quantum mechanics. And neither lets you travel around the multiverse.

In eternal inflation, the universe is expanding faster and faster. It’s expanding so fast that, in most places, there isn’t enough time for anything complicated to form. Occasionally, though, due to quantum randomness, a small part of the universe expands a bit more slowly: slowly enough for stars, planets, and maybe life. Each small part like that is its own little “Big Bang”, potentially with a different “default” state, a different vacuum from the string landscape. If eternal inflation is true, then you can get multiple worlds, but they’re very far apart, and getting farther every second: not easy to visit.

The many-worlds interpretation is one way to think about quantum mechanics. Another, more familiar way is to say that quantum states are undetermined until you measure them: a particle could be spinning left or right, Schrödinger’s cat could be alive or dead, and only when measured is their state certain. The many-worlds interpretation does away with measurement as a special step and instead keeps the universe in the initial “undetermined” state. The universe only looks determined to us because of our place in it: our states become entangled with those of particles and cats, so that our experiences only correspond to one determined outcome, the “cat alive branch” or the “cat dead branch”. Combine this with the string landscape, and our universe might have split into different “branches” for each possible stable state, each possible vacuum. But you can’t travel to those places: your experiences are still “just on one branch”. If they weren’t, many-worlds wouldn’t be an interpretation. It would just be obviously wrong.
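
For readers who like symbols, the entanglement described above can be sketched in a toy model. This is only an illustration under simplifying assumptions: the cat has just two states, the observer has just three, and the state labels are made up here rather than taken from the book or from any particular paper.

```latex
% Toy model (illustrative assumption): a two-state cat and an observer.
% Before the observer looks, the cat is in a superposition;
% afterwards, the observer's state is correlated with the cat's.
\[
\frac{1}{\sqrt{2}}\Bigl(|\text{alive}\rangle + |\text{dead}\rangle\Bigr)\otimes|\text{ready}\rangle
\;\longrightarrow\;
\frac{1}{\sqrt{2}}\Bigl(|\text{alive}\rangle\otimes|\text{sees alive}\rangle
+ |\text{dead}\rangle\otimes|\text{sees dead}\rangle\Bigr)
\]
```

Nothing collapses in this picture: both terms are still there after the observer looks. Each term pairs one cat outcome with one matching experience, and that pairing is all that being “on one branch” means.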

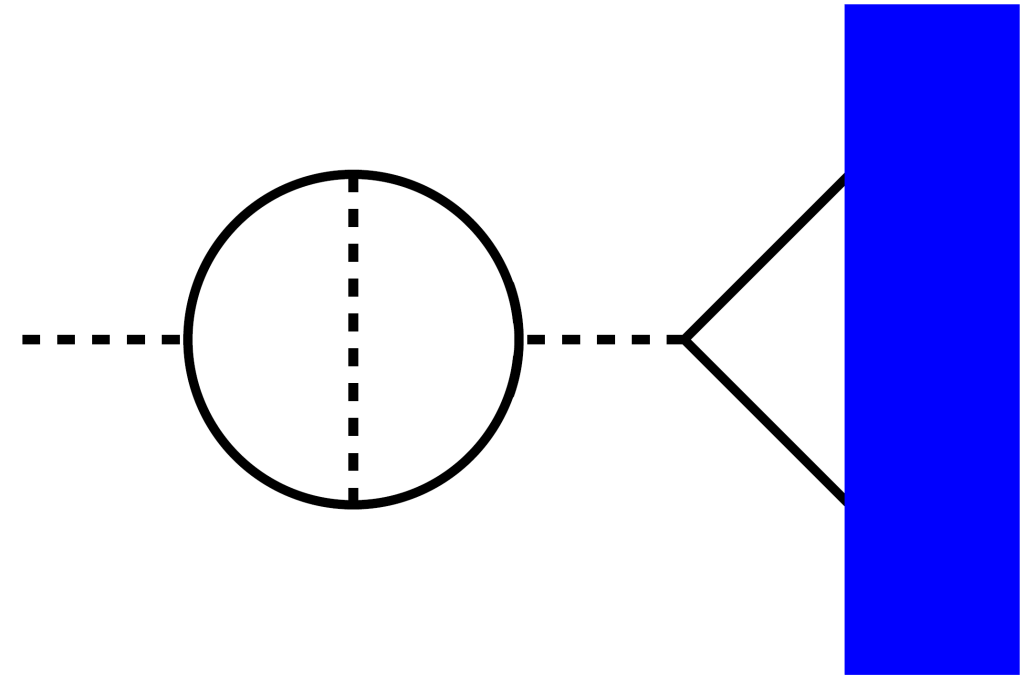

In River of Gods, the AI manipulates a power company into using a particle accelerator to make a bubble of a different vacuum in the landscape. Surprisingly, that isn’t impossible. Making a bubble like that is a bit like what the Large Hadron Collider does, but on a much larger scale. When the Large Hadron Collider detected a Higgs boson, it had created a small ripple in the Higgs field, a small deviation from its default state. One could imagine a bigger ripple doing more: with vastly more energy, maybe you could force the Higgs all the way to a different default, a new vacuum in its landscape of possibilities.

Doing that doesn’t create a portal to another world, though. It destroys our world.

That bubble of a different vacuum isn’t another branch of quantum many-worlds, and it isn’t a far-off big bang from eternal inflation. It’s a part of our own universe, one with a different “default state” where the particles we’re made of can’t exist. And typically, a bubble like that spreads at the speed of light.

In the story, they have a way to stabilize the bubble, to stop it from growing or shrinking. That’s at least vaguely believable. But it means that their “portal to another world” is just a little bubble in the middle of a big expensive device. Maybe the AI can live there happily…until the humans pull the plug.

Or maybe they can’t stabilize it, and the bubble spreads and spreads at the speed of light, destroying everything. That would certainly be another way for the AI to live without human interference. It’s a bit less peaceful than advertised, though.