While I do love science, I don’t always love IFL Science. They can be good at drumming up enthusiasm, but they can also be ridiculously gullible. Case in point: last week, IFL Science ran a piece on a recent paper purporting to give evidence for faster-than-light particles.

Faster than light! Sounds cool, right? Here’s why you should be skeptical:

If a science article looks dubious, you should check out the source. In this case, IFL Science links to an article on the preprint server arXiv.

arXiv is a freely accessible website where physicists and mathematicians post their articles. The site has multiple categories, corresponding to different fields. It’s got categories for essentially any type of physics you’d care to include, with the option to cross-list if you think people from multiple areas might find your work interesting.

So which category is this paper in? Particle physics? Astrophysics?

General Physics, actually.

General Physics is arXiv’s catch-all category. Some of it really is general, and can’t be put into any more specific place. But most of it, including this paper, falls into another category: things arXiv’s moderators think are fishy.

arXiv isn’t a journal. If you follow some basic criteria, it won’t reject your articles. Instead, dubious articles are put into General Physics, to signify that they don’t seem to belong with the other scholarship in the established categories. General Physics is a grab-bag of weird ideas and crackpot theories, a mix of fringe physicists and overenthusiastic amateurs. There probably are legitimate papers in there too…but for every paper in there, you can guarantee that some experienced researcher found it suspicious enough to send into exile.

Even if you don’t trust the moderators of arXiv, there are other reasons to be wary of faster-than-light particles.

According to Einstein’s theory of relativity, massless particles travel at the speed of light, while massive particles always travel slower. To travel faster than the speed of light, you need to have a very unusual situation: a particle whose mass is an imaginary number.
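If you’d like to see that with numbers, here’s a minimal sketch. It assumes the standard relativistic relations E² = p² + m² and v = p/E, in units where the speed of light is 1. The function name `speed` and the sample values are just for illustration, and the mass is passed in squared so that an imaginary mass shows up as a negative number.

```python
import math

def speed(p, m_squared):
    """Speed (as a fraction of c) for momentum p and squared mass m_squared, with c = 1."""
    energy_squared = p**2 + m_squared  # E^2 = p^2 + m^2
    if energy_squared <= 0:
        raise ValueError("need p^2 + m^2 > 0 for a real energy")
    return p / math.sqrt(energy_squared)  # v = p / E

# Illustrative values only: same momentum, three kinds of mass.
cases = [
    ("massive, m^2 = 1", 1.0),
    ("massless, m^2 = 0", 0.0),
    ("imaginary mass, m^2 = -1", -1.0),
]
for label, m_squared in cases:
    print(label, speed(2.0, m_squared))
```

The massive case comes out below 1, the massless case is exactly 1, and only the negative squared mass gives a speed above 1.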

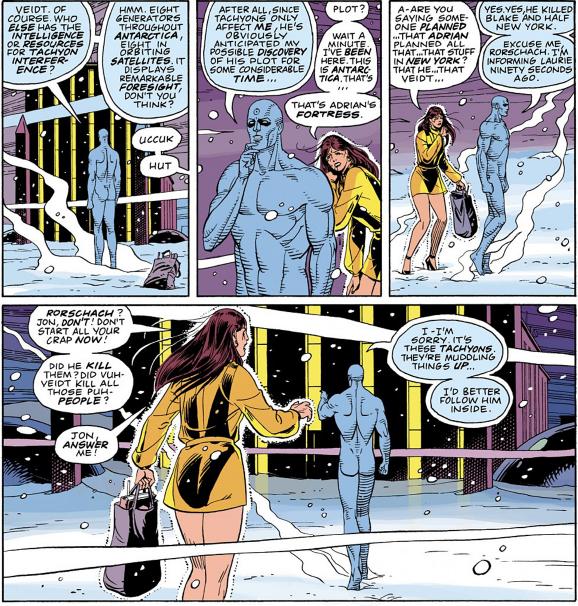

Particles like that are called tachyons, and they’re a staple of science fiction. While there was a time when they were a serious subject of physics speculation, nowadays the general view is that tachyons are a sign we’re making bad assumptions.

Why is that? It has to do with the nature of mass.

In quantum field theory, the things we observe as particles arise as ripples in quantum fields, extending across space and time. The harder it is to make the field ripple, the higher the particle’s mass.

A tachyon has imaginary mass. This means that it isn’t hard to make the field ripple at all. In fact, exactly the opposite happens: it’s easier to ripple than to stay still! Any ripple, no matter how small, will keep growing until it’s not just a ripple, but a new default state for the field. Only when it becomes hard to change again will the changes stop. If it’s hard to change, though, then the particle has a normal, non-imaginary mass, and is no longer a tachyon!
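Here’s a rough sketch of that story in code. It assumes a textbook toy potential, V(φ) = -½μ²φ² + ¼λφ⁴, which is not taken from the paper in question. The names `MU`, `LAMBDA`, `mass_squared`, and `settle`, and the numbers plugged in, are all made up for illustration. The "mass squared" is the curvature of the potential at whatever value the field is sitting at.

```python
import math

MU = 1.0      # sets the size of the imaginary mass (hypothetical value)
LAMBDA = 0.5  # strength of the term that eventually stops the growth (hypothetical value)

def slope(phi):
    """Derivative of the toy potential V = -0.5*MU^2*phi^2 + 0.25*LAMBDA*phi^4."""
    return -MU**2 * phi + LAMBDA * phi**3

def mass_squared(phi):
    """Curvature of the potential at phi: how hard it is to ripple the field there."""
    return -MU**2 + 3.0 * LAMBDA * phi**2

def settle(phi, step=0.01, n_steps=5000):
    """Let the field slide downhill from phi until it stops changing."""
    for _ in range(n_steps):
        phi -= step * slope(phi)
    return phi

# Start with a tiny ripple around the "wrong" default state, phi = 0.
new_default = settle(1e-6)

print("mass^2 at the old default:", mass_squared(0.0))
print("new default state:", new_default)
print("mass^2 at the new default:", mass_squared(new_default))
```

Around φ = 0 the mass squared is negative, so the tiny ripple grows. Once the field settles near μ/√λ, the mass squared is positive, and nothing is a tachyon anymore.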

Thus, the modern understanding is that if a theory has tachyons in it, it’s because we’re assuming that one of the quantum fields has the wrong default state. Switching to the correct default gets rid of the tachyons.

There are deeper problems with the idea proposed in this paper. Normally, the only types of fields that can have tachyons are scalars, fields that can be defined by a single number at each point, sort of like a temperature. The particles this paper describes aren’t scalars, though: they’re fermions, the type of particle that includes everyday matter like electrons. Those sorts of particles can’t be tachyons at all without breaking some fairly important laws of physics. (For a technical explanation of why this is, Lubos Motl’s reply to the post here is pretty good.)

Of course, this paper’s author knows all this. He’s well aware that he’s suggesting bending some fairly fundamental laws, and he seems to think there’s room for it. But that, really, is the issue here: there’s room for it. The paper isn’t, as IFL Science seems to believe, six pieces of evidence for faster-than-light particles. It’s six measurements that, if you twist them around and squint and pick exactly the right model, have room for faster-than-light particles. And that’s…probably not worth an article.