A couple weeks back, there was a bit of a scuffle between Matt Strassler and Peter Woit on the subject of predictions in string theory (or more properly, the question of whether any predictions can be made at all). As a result, Strassler has begun a series on the subject of quantum field theory, string theory, and predictions.

Strassler hasn’t gotten to the topic of string vacua yet, but he’s probably going to cover the subject in a future post. While his take on the subject is likely to be more expansive and precise than mine, I think my perspective on the problem might still be of interest.

Let’s start with the basics: one of the problems often cited with string theory is the landscape problem, the idea that string theory has a metaphorical landscape of around 10^500 vacua.

What are vacua?

Vacua is the plural of vacuum.

Ok, and?

A vacuum is empty space.

That’s what you thought, right? That’s the normal meaning of vacuum. But if a vacuum is empty, how can there be more than one of them, let alone 10^500?

“Empty” is subjective.

Now we’re getting somewhere. The problem with defining a concept like “empty space” in string theory or field theory is that it’s unclear what precisely it should be empty of. Naively, such a space should be empty of “stuff”, or “matter”, but our naive notions of “matter” don’t apply to field theory or string theory. In fact, there is plenty of “stuff” that can be present in “empty” space.

Think about two pieces of construction paper. One is white, the other is yellow. Which is empty? Neither has anything drawn on it, so although the two differ in color, both are empty.

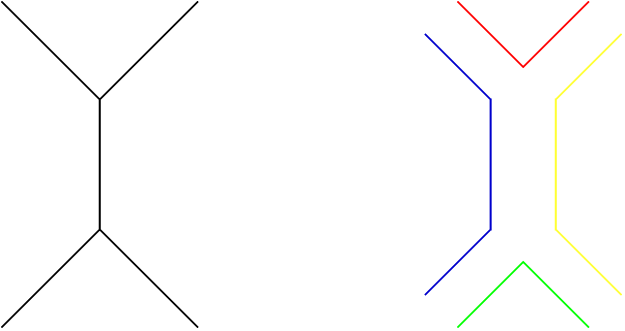

“Empty space” doesn’t come in multiple colors like construction paper, but there are equivalent parameters that can vary. In quantum field theory, one option is for scalar fields to take different values. In string theory, different dimensions can be curled up in different ways (as an aside, when string theory leads to a quantum field theory, these different curling-up shapes often correspond to different values for scalar fields, so the two ideas are related).

So if space can have “stuff” in it and still count as empty, are there any limits on what can be in it?

As it turns out, there is a quite straightforward limit. But to explain it, I need to talk a bit about why physicists care about vacua in the first place.

Why do physicists care about vacua?

In physics, there is a standard modus operandi for solving problems. If you’ve taken even a high school physics course, you’ve probably encountered it in some form. It’s not the only way to solve problems, but it’s one of the easiest. The idea, broadly, is the following:

First get the initial conditions, and then use the laws of physics to see what happens next.

In high school physics, this is how almost every problem works: your teacher tells you what the situation is, and you use what you know to figure out what happens next.

In quantum field theory, things are a bit more subtle, but there is a strong resemblance. You start with a default state, and then find the perturbations, or small changes, around that state.

In high school, your teacher told you what the initial conditions were. In quantum field theory, you need another source for the “default state”. Sometimes, you get that from observations of the real world. Sometimes, though, you want to make a prediction that goes beyond what your observations tell you. In that case, one trick often proves useful:

To find the default state, find which state is stable.

If your system starts out in a state that is unstable, it will change. It will keep changing until eventually it changes into a stable state, where it will stop changing. So if you’re looking for a default state, that state should be one in which the system is stable, where it won’t change.

(I’m oversimplifying things a bit here to make them easier to understand. In particular, I’m making it sound like these things change over time, which is a bit of a tricky subject when talking about different “default” states for the whole of space and time. There’s also a cool story connected to this about why tachyons don’t exist, which I’d love to go into in another post.)

Since we know that the “default” state has to be stable, if there is only one stable state, we’ve found the default!

Because of this, we can lay down a somewhat better definition:

A vacuum is a stable state.

There’s more to the definition than this, but this should be enough to give you the feel for what’s going on. If we want to know the “default” state of the world, the state which everything else is just a small perturbation on top of, we need to find a vacuum. If there is only one plausible vacuum, then our work is done.
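To make “a vacuum is a stable state” a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption chosen for illustration: the toy potential V(phi) = (phi^2 - 1)^2 for a single scalar field, and the helper names `slope`, `curvature`, `bisect`, and `classify_stationary_points`. It has nothing to do with any actual string theory calculation. The idea is just that the field stops changing wherever the slope of the potential is zero, and that such a point is stable only if the potential curves upward there.

```python
# Toy potential for one scalar field (an illustrative assumption, not from
# any real model): V(phi) = (phi^2 - 1)^2, a "double well".

def slope(phi):
    # First derivative of V: dV/dphi = 4 * phi * (phi^2 - 1)
    return 4.0 * phi * (phi**2 - 1.0)

def curvature(phi):
    # Second derivative of V: d2V/dphi2 = 12 * phi^2 - 4
    return 12.0 * phi**2 - 4.0

def bisect(f, a, b, tol=1e-12):
    # Standard bisection: assumes f(a) and f(b) have opposite signs.
    fa = f(a)
    while b - a > tol:
        m = 0.5 * (a + b)
        fm = f(m)
        if fm == 0.0:
            return m
        if fa * fm < 0:
            b = m
        else:
            a, fa = m, fm
    return 0.5 * (a + b)

def classify_stationary_points(lo=-2.0, hi=2.0, steps=41):
    # Scan the interval for sign changes in the slope, refine each one by
    # bisection, then label it by the sign of the curvature.
    # With these defaults no grid point lands exactly on a zero of the slope.
    width = (hi - lo) / steps
    results = []
    for i in range(steps):
        a = lo + i * width
        b = a + width
        if slope(a) * slope(b) < 0:
            phi = bisect(slope, a, b)
            kind = "stable" if curvature(phi) > 0 else "unstable"
            results.append((round(phi, 6), kind))
    return results

points = classify_stationary_points()
vacua = [phi for phi, kind in points if kind == "stable"]
print(points)
print("vacua:", vacua)
```

In this toy there are three places where the field could sit still, near phi = -1, 0, and 1, but the middle one is perched on top of a hill and only the outer two count as vacua. Even this simple example already has more than one.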

When there are many plausible vacua, though, we have a problem. When there are 10^500 vacua, we have a huge problem.

That, in essence, is why many people despair of string theory ever making any testable predictions. String theory has around 10^500 plausible vacua (for a specific, technical meaning of plausible).

It’s important to remember a few things here.

First, the reason we care about vacuum states is that we want a “default” to make predictions around. That is, in a sense, a technical problem, in that it is an artifact of our method. It’s a result of the fact that we are choosing a default state and perturbing around it, rather than proving things that don’t depend on our choice of default state. That said, this isn’t as useful an insight as it might appear, and as it turns out there is generally very little that can be predicted without choosing a vacuum.

Second, the reason that the large number of vacua is a problem is that if there were only one vacuum, we would know which state was the default state for our world. Instead, we need some other method to pick, out of the many possible vacua, which one to use to make predictions. That is, in a sense, a philosophical problem, in that it asks what seems ostensibly to be a philosophical question: what is the basic, default state of the universe?

This happens to be a slightly more useful insight than the first one, and it leads to a number of different approaches. The most intuitive solution is to just shrug and say that we will see which vacuum we’re in by observing the world around us. That’s a little glib, since many different vacua could lead to very similar observations. A better tactic might be to try to make predictions on general grounds by trying to see what the world we can already observe implies about which vacua are possible, but this is also quite controversial. And there are some people who try another approach, attempting to pick a vacuum not based on observations, but rather on statistics, choosing a vacuum that appears to be “typical” in some sense, or that satisfies anthropic constraints. All of these, again, are controversial, and I make no commentary here about which approaches are viable and which aren’t. It’s a complicated situation and there are a fair number of people working on it. Perhaps, in the end, string theory will be ruled un-testable. Perhaps the relevant solution is right under people’s noses. We just don’t know.