Sabine Hossenfelder has a blog post this week chastising particle physicists and cosmologists for following “upside-down Popper”, or assuming a theory is worth working on merely because it’s falsifiable. She describes her colleagues churning out one hypothesis after another, each tweaking an old idea just enough to make it falsifiable in the next experiment, without caring whether the hypothesis is actually likely to be true.

Sabine is much more of an expert in this area of physics (phenomenology) than I am, and I don’t presume to tell her she’s wrong about that community. But the problem she’s describing is part of something bigger, something that affects my part of physics as well.

There’s a core question we’d all like to answer: what should physicists work on? What criteria should guide us?

Falsifiability isn’t the whole story. The next obvious criterion is a sense of simplicity, of Occam’s Razor or mathematical elegance. Sabine has argued against the latter, which prompted a friend of mine to comment that between rejecting falsifiability and elegance, Sabine must want us to stop doing high-energy physics at all!

That’s more than a little unfair, though. I think Sabine has a reasonably clear criterion in mind. It’s the same criterion that most critics of the physics mainstream care about. It’s even the same criterion being used by the “other side”, the sort of people who criticize anything that’s not string/SUSY/inflation.

The criterion is quite a simple one: physics research should be productive. Anything we publish, anything we work on, should bring us closer to understanding the real world.

And before you object that this criterion is obvious, that it’s subjective, that it ignores the very real disagreements between the Sabines and the Luboses of the world…before any of that, please let me finish.

We can’t achieve this criterion. And we shouldn’t.

We can’t demand that all physics be productive without breaking a fundamental bargain, one we made when we accepted that science could be a career.

The Hunchback of Notre Science

It wasn’t always this way. Up until the nineteenth century, “scientist” was a hobby, not a job.

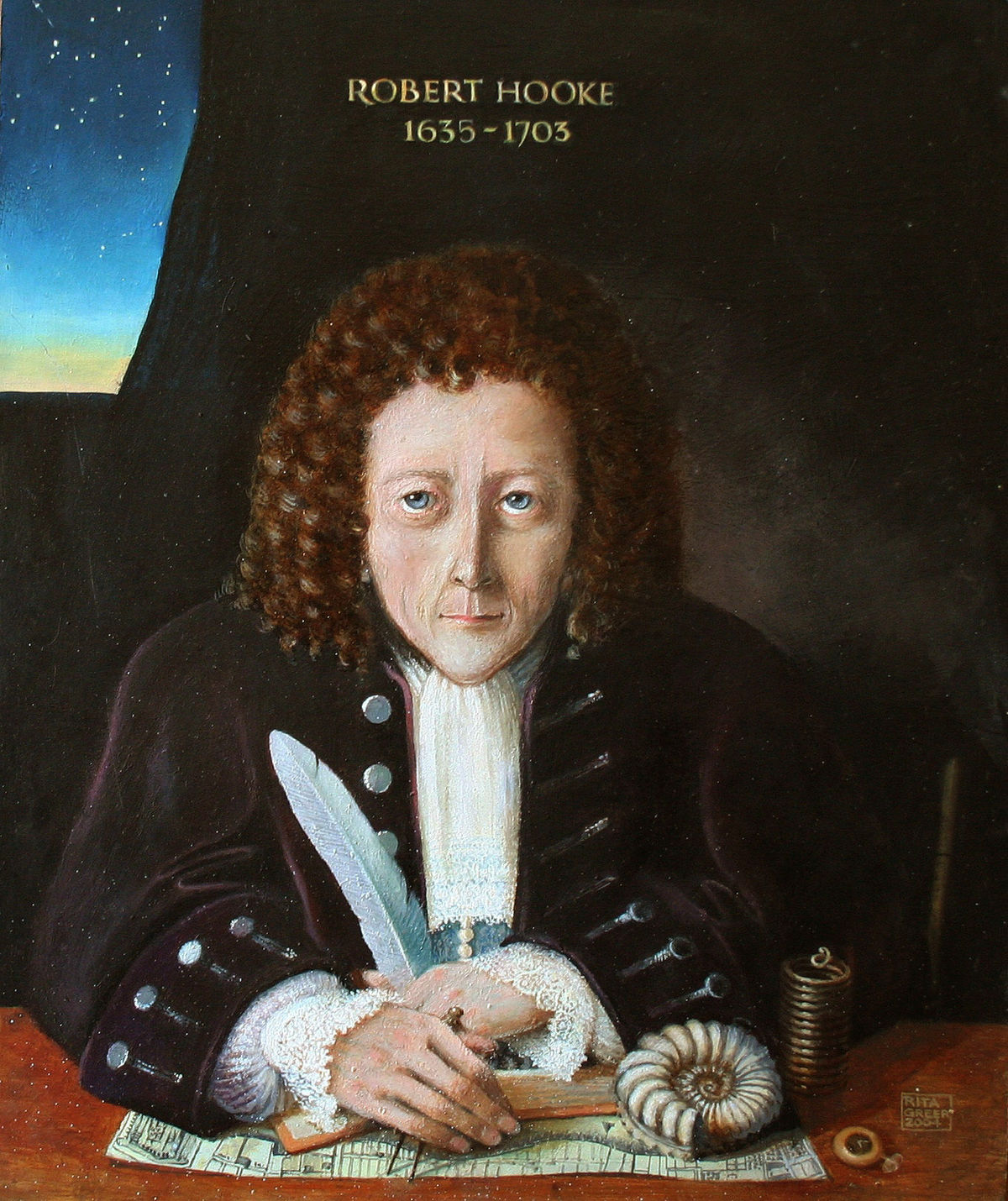

After Newton published his theory of gravity, he was famously accused by Robert Hooke of stealing the idea. There’s some controversy about this, but historians agree on a few points: that Hooke did write a letter to Newton suggesting an inverse-square force law, and that Hooke, unlike Newton, never really worked out the law’s full consequences.

Why not? In part, because Hooke, unlike Newton, had a job.

Hooke was arguably the first person for whom science was a full-time source of income. As curator of experiments for the Royal Society, it was his responsibility to set up demonstrations for each Royal Society meeting. Later, he also handled correspondence for the Royal Society Journal. These responsibilities took up much of his time, and as a result, even if he was capable of following up on the consequences of an inverse-square law, he wouldn’t have had time to focus on it. That kind of calculation wasn’t what he was being paid for.

We’re better off than Hooke today. We still have our responsibilities, to journals and teaching and the like, at various stages of our careers. But in the centuries since Hooke, expectations have changed, and real original research is no longer something we have to fit into our spare time. It’s now a central expectation of the job.

When scientific research became a career, we accepted a kind of bargain. On the positive side, you no longer have to be independently wealthy to contribute to science. More than that, the existence of professional scientists is the bedrock of technological civilization. With enough scientists around, we get modern medicine and the internet and space programs and the LHC, things that wouldn’t be possible in a world of rare wealthy geniuses.

We pay a price for that bargain, though. If science is a steady job, then it has to provide steady work. A scientist has to be able to go in, every day, and do science.

And the problem is, science doesn’t always work like that. There isn’t always something productive to work on. Even when there is, there isn’t always something productive for you to work on.

Sabine blames “upside-down Popper” on the current publish-or-perish environment in physics. If physics careers weren’t so cut-throat and the metrics they are judged by weren’t so flawed, then maybe people would have time to do slow, careful work on deeper topics rather than pumping out minimally falsifiable papers as fast as possible.

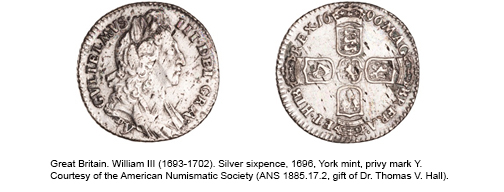

There’s a lot of truth to this, but I think at its core it’s a bit too optimistic. Each of us only has a certain amount of expertise, and sometimes that expertise just isn’t likely to be productive at the moment. Because science is a job, a person in that position can’t just go work at the Royal Mint like Newton did. (The modern-day equivalent would be working for Wall Street, but physicists rarely come back from that.) Instead, they keep doing what they know how to do, slowly branching out, until they’ve either learned something productive or their old topic becomes useful once more. You can think of it as a form of practice, where scientists keep their skills honed until they’re needed.

So if we slow down the rate of publication, if we create metrics for universities that let them hire based on the depth and importance of work and not just number of papers and citations, if we manage all of that, then yes, we will improve science a great deal. But Lisa Randall still won’t work on Haag’s theorem.

In the end, we’ll still have physicists working on topics that aren’t actually productive.

A physicist lazing about unproductively under an apple tree

So do we have to pay physicists to work on whatever they want, no matter how ridiculous?

No, I’m not saying that. We can’t expect everyone to do productive work all the time, but we can absolutely establish standards to make the work more likely to be productive.

Strange as it may sound, I think our standards for this are already quite good, or at least better than many other fields.

First, there’s falsifiability itself, or specifically our attitude towards it.

Physics’s obsession with falsifiability has one important benefit: it means that when someone proposes a new model of dark matter or inflation that they tweaked to be just beyond the current experiments, they don’t claim to know it’s true. They just claim it hasn’t been falsified yet.

This is quite different from what happens in biology and the social sciences. There, if someone tweaks their study to be just within statistical significance, people typically assume the study demonstrated something real. Doctors base treatments on it, and politicians base policy on it. Upside-down Popper has its flaws, but at least it’s never going to kill anybody, or put anyone in prison.

Admittedly, that’s a pretty low bar. Let’s try to set a higher one.

Moving past falsifiability, what about originality? We have very strong norms against publishing work that someone else has already done.

Ok, you (and probably Sabine) would object, isn’t that easy to get around? Aren’t all these Popper-flippers pretending to be original but really just following the same recipe each time, modifying their theory just enough to stay falsifiable?

To some extent. But if they were really following a recipe, you could beat them easily: just write the recipe down.

Physics progresses best when we can generalize, when we skip from case-by-case to understanding whole swaths of cases at once. Over time, there have been plenty of cases in which people have done that, where a number of fiddly hand-made models have been summarized in one parameter space. Once that happens, the rule of originality kicks in: now, no-one can propose another fiddly model like that again. It’s already covered.

As long as the recipe really is just a recipe, you can do this. You can write up what these people are doing in computer code, release the code, and then that’s that, they have to do something else. The problem is, most of the time it’s not really a recipe. It’s close enough to one that they can rely on it, close enough to one that they can get paper after paper when they need to…but it still requires just enough human involvement, just enough genuine originality, to be worth a paper.

The good news is that the range of “recipes” we can code up increases with time. Some spaces of theories we might never be able to describe in full generality (I’m glad there are people trying to do statistics on the string landscape, but good grief it looks quixotic). Some of the time though, we have a real chance of putting a neat little bow on a subject, labeled “no need to talk about this again”.

This emphasis on originality keeps the field moving. It means that despite our bargain, despite having to tolerate “practice” work as part of full-time physics jobs, we can still nudge people back towards productivity.

One final point: it’s possible you’re completely ok with the idea of physicists spending most of their time “practicing”, but just wish they wouldn’t make such a big deal about it. Maybe you can appreciate that “can I cook up a model where dark matter kills the dinosaurs” is an interesting intellectual exercise, but you don’t think it should be paraded in front of journalists as if it were actually solving a real problem.

In that case, I agree with you, at least up to a point. It is absolutely true that physics has a dysfunctional relationship with the media. We’re too used to describing whatever we’re working on as the most important thing in the universe, and journalists are convinced that’s the only way to get the public to pay attention. This is something we can and should make progress on. An increasing number of journalists are breaking from the trend and focusing not on covering the “next big thing”, but on telling stories about people. We should do all we can to promote those journalists, to spread their work over the hype, to encourage the kind of stories that treat “practice” as interesting puzzles pursued by interesting people, not the solution to the great mysteries of physics. I know that if I ever do anything newsworthy, there are some journalists I’d give the story to before any others.

At the same time, it’s important to understand that some of the dysfunction here isn’t unique to physics, or even to science. Deep down, the reason nobody can admit that their physics is “practice” work is the same reason people at job interviews claim to love the company, the same reason college applicants have to tell stirring stories of hardship and couples spend tens of thousands on weddings. We live in a culture in which nothing can ever just be “ok”, in which admitting things are anything other than exceptional is akin to calling them worthless. It’s an arms race of exaggeration, and it goes far beyond physics.

(I should note that this “culture” may not be as universal as I think it is. If so, it’s possible its presence in physics is due to you guys letting too many of us Americans into the field.)

We made a bargain when we turned science into a career. We bought modernity, but the price we pay is subsidizing some amount of unproductive “practice” work. We can negotiate the terms of our bargain, and we should, tilting the field with incentives to get it closer to the truth. But we’ll never get rid of it entirely, because science is still done by people. And sometimes, despite what we’re willing to admit, people are just “ok”.