If you ever think metaphysics is easy, learn a little quantum field theory.

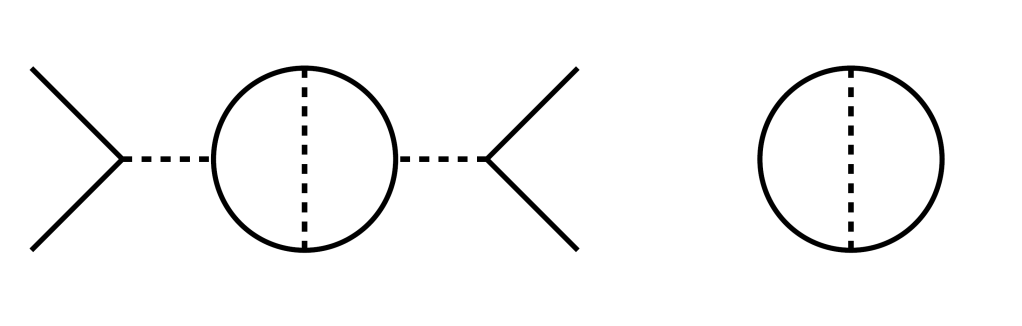

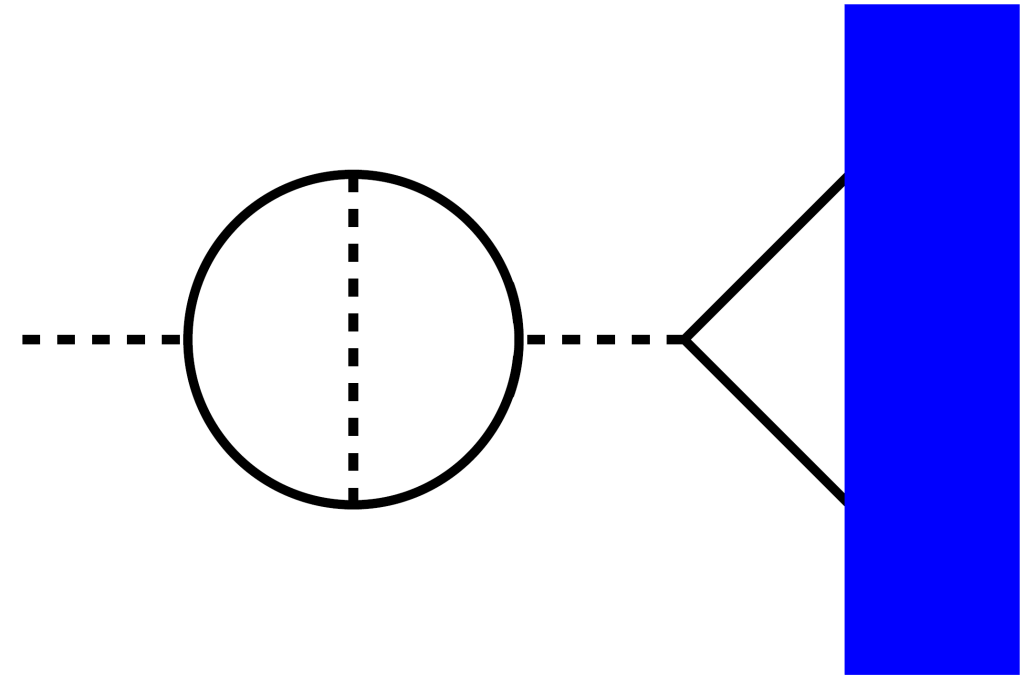

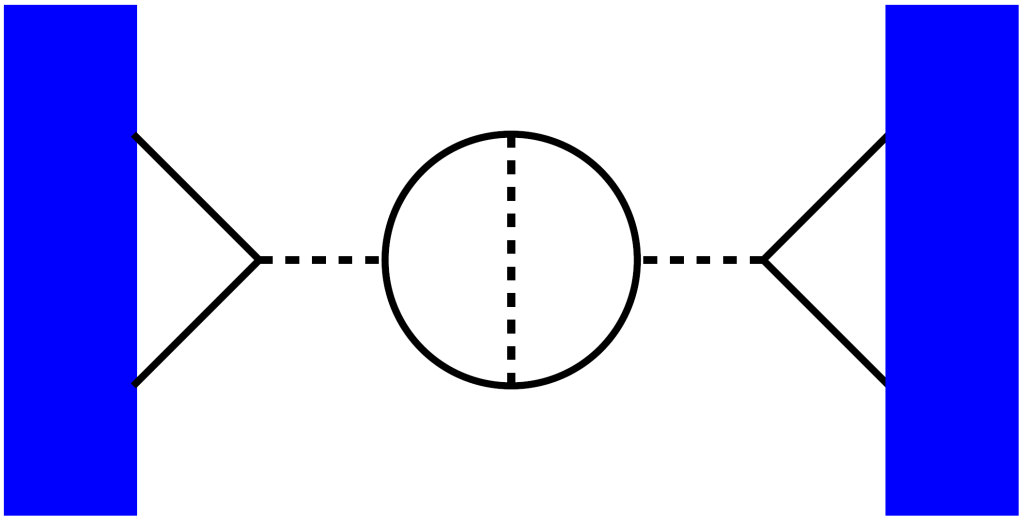

Someone asked me recently about virtual particles. When talking to the public, physicists sometimes explain the behavior of quantum fields with what they call “virtual particles”. They’ll describe forces coming from virtual particles going back and forth, or a bubbling sea of virtual particles and anti-particles popping out of empty space.

The thing is, this is a metaphor. What’s more, it’s a metaphor for an approximation. As physicists, when we draw diagrams with more and more virtual particles, we’re trying to use something we know how to calculate with (particles) to understand something tougher to handle (interacting quantum fields). Virtual particles, at least as you’re probably picturing them, don’t really exist.

I don’t really blame physicists for talking like that, though. Virtual particles are a metaphor, sure, a way to talk about a particular calculation. But so is basically anything we can say about quantum field theory. In quantum field theory, it’s pretty tough to say which things “really exist”.

I’ll start with an example: neutrino oscillation.

You might have heard that there are three types of neutrinos, corresponding to the three “generations” of the Standard Model: electron-neutrinos, muon-neutrinos, and tau-neutrinos. Each is produced in particular kinds of reactions: electron-neutrinos, for example, get produced by beta-plus decay, when a proton turns into a neutron, an anti-electron, and an electron-neutrino.

Leave these neutrinos alone, though, and something strange happens. Detect what you expect to be an electron-neutrino, and it might have changed into a muon-neutrino or a tau-neutrino. The neutrino oscillated.

Why does this happen?

One way to explain it is to say that electron-neutrinos, muon-neutrinos, and tau-neutrinos don’t “really exist”. Instead, what really exists are neutrinos with specific masses. These don’t have catchy names, so let’s just call them neutrino-one, neutrino-two, and neutrino-three. What we think of as electron-neutrinos, muon-neutrinos, and tau-neutrinos are each some mix (a quantum superposition) of these “really existing” neutrinos, specifically the mixes that interact nicely with electrons, muons, and tau leptons respectively. When you let them travel, it’s the mass-neutrinos that do the traveling, and due to quantum effects that I’m not explaining here you end up with a different mix than you started with.
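For readers who like to see numbers, here is a minimal sketch of that story in a simplified world with only two kinds of neutrino instead of three. Everything in it is an illustrative assumption rather than something from the discussion above: the function name `oscillation_probability`, the single mixing angle `theta`, and the sample values in the loop are made up for the example. The factor 1.267 is the usual unit conversion when the mass-squared difference is in eV², the distance in km, and the energy in GeV.

```python
import cmath
import math


def oscillation_probability(theta, delta_m2_ev2, length_km, energy_gev):
    """Chance that a neutrino made as electron-type is detected as muon-type.

    Two-flavor toy model: theta is the mixing angle, delta_m2_ev2 is the
    difference of the squared masses of neutrino-one and neutrino-two.
    """
    # Each flavor neutrino written as a mix of (neutrino-one, neutrino-two).
    nu_e = (math.cos(theta), math.sin(theta))
    nu_mu = (-math.sin(theta), math.cos(theta))

    # While traveling, the two mass-neutrinos pick up different phases.
    # Only the relative phase matters, so put all of it on neutrino-two.
    phase = 2 * 1.267 * delta_m2_ev2 * length_km / energy_gev
    evolved = (nu_e[0], nu_e[1] * cmath.exp(-1j * phase))

    # Overlap of the evolved mix with the muon-type mix.
    amplitude = nu_mu[0] * evolved[0] + nu_mu[1] * evolved[1]
    return abs(amplitude) ** 2


if __name__ == "__main__":
    # Illustrative values only, not measured parameters.
    for length_km in (0.0, 100.0, 250.0, 500.0):
        p = oscillation_probability(0.6, 2.5e-3, length_km, 1.0)
        print(f"L = {length_km:6.1f} km -> P(e to mu) = {p:.4f}")
```

Under these assumptions the result agrees with the textbook two-flavor formula, sin²(2θ) · sin²(1.267 Δm² L / E): the probability starts at zero, then rises and falls with distance, which is the “oscillation”.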

This probably seems like a perfectly reasonable explanation. But it shouldn’t. Because if you take one of these mass-neutrinos and let it interact with an electron, or a muon, or a tau, then suddenly it behaves like a mix of the old electron-neutrinos, muon-neutrinos, and tau-neutrinos.

That’s because both explanations are trying to chop the world up in a way that can’t be done consistently. There aren’t electron-neutrinos, muon-neutrinos, and tau-neutrinos, and there aren’t neutrino-ones, neutrino-twos, and neutrino-threes. There’s a mathematical object (a vector space) that can look like either.
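To make “can look like either” a bit more concrete, here is how it reads in a simplified two-neutrino version, assuming a single mixing angle θ (the real case has three neutrinos and more parameters). The same two-dimensional space can be described with either pair of states, and each pair is just a rotation of the other:

```latex
\begin{aligned}
|\nu_e\rangle &= \cos\theta\,|\nu_1\rangle + \sin\theta\,|\nu_2\rangle, &
|\nu_\mu\rangle &= -\sin\theta\,|\nu_1\rangle + \cos\theta\,|\nu_2\rangle, \\
|\nu_1\rangle &= \cos\theta\,|\nu_e\rangle - \sin\theta\,|\nu_\mu\rangle, &
|\nu_2\rangle &= \sin\theta\,|\nu_e\rangle + \cos\theta\,|\nu_\mu\rangle.
\end{aligned}
```

Neither line is more fundamental than the other. You can read the top one as “flavor neutrinos are mixes of mass-neutrinos” and the bottom one as “mass-neutrinos are mixes of flavor neutrinos”, and both are equally true.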

Whether you’re comfortable with that depends on whether you think of mathematical objects as “things that exist”. If you aren’t, you’re going to have trouble thinking about the quantum world. Maybe you want to take a step back, and say that at least “fields” should exist. But that still won’t do: we can redefine fields, add them together or even use more complicated functions, and still get the same physics. The kinds of things that exist can’t be like this. Instead you end up invoking another kind of mathematical object, equivalence classes.

If you want to be totally rigorous, you have to go a step further. You end up thinking of physics in a very bare-bones way, as the set of all observations you could perform. Instead of describing the world in terms of “these things” or “those things”, the world is a black box, and all you’re doing is finding patterns in that black box.

Is there a way around this? Maybe. But it requires thought, and serious philosophy. It’s not intuitive, it’s not easy, and it doesn’t lend itself well to 3D animations in documentaries. So in practice, whenever anyone tells you about something in physics, you can be pretty sure it’s a metaphor. Nice, describable, non-mathematical things typically don’t exist.