How many angels can dance on the head of a pin?

It’s a question famous for its sheer pointlessness. While probably no-one ever had that exact debate, “how many angels fit on a pin” has become a metaphor, first for a host of old theology debates that went nowhere, and later for any academic study that seems like a waste of time. Occasionally, physicists get accused of doing this: typically string theorists, but also people who debate interpretations of quantum mechanics.

Are those accusations fair? Sometimes yes, sometimes no. In order to tell the difference, we should think about what’s wrong, exactly, with counting angels on the head of a pin.

One obvious answer is that knowing the number of angels that fit on a needle’s point is useless. Wikipedia suggests that was the origin of the metaphor in the first place, a pun on “needle’s point” and “needless point”. But this answer is a little too simple, because this would still be a useful debate if angels were real and we could interact with them. “How many angels fit on the head of a pin” is really a question about whether angels take up space, whether two angels can be at the same place at the same time. Asking that question about particles led physicists to bosons and fermions, which among other things led us to invent the laser. If angelology worked, perhaps we would have angel lasers as well.

“If angelology worked” is key here, though. Angelology didn’t work: it didn’t lead to angel-based technology. And while Medieval people couldn’t have known that for certain, maybe they could have guessed. When people accuse academics of “counting angels on the head of a pin”, they’re saying they should be able to guess that their work is destined for uselessness.

How do you guess something like that?

Well, one problem with counting angels is that nobody doing the counting had ever seen an angel. Counting angels on the head of a pin implies debating something you can’t test or observe. That can steer you off-course pretty easily, into conclusions that are either useless or just plain wrong.

This can’t be the whole of the problem, though, because of mathematics. We rarely accuse mathematicians of counting angels on the head of a pin, but the whole point of math is to proceed by pure logic, without an experiment in sight. Mathematical conclusions can sometimes be useless (though we can never be sure; some ideas are just ahead of their time), but we don’t expect them to be wrong.

The key difference is that mathematics has clear rules. When two mathematicians disagree, they can look at the details of their arguments, make sure every definition is as clear as possible, and discover which one made a mistake. Working this way, what they build is reliable. Even if it isn’t useful yet, the result is still true, and so may well be useful later.

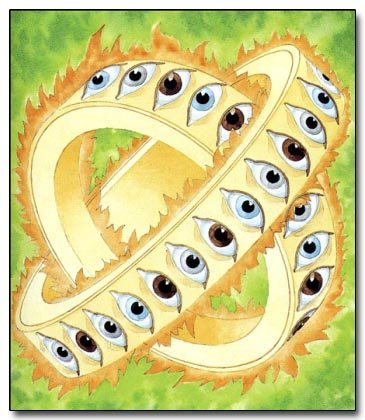

In contrast, when you imagine Medieval monks debating angels, you probably don’t imagine them with clear rules. They might quote contradictory Bible passages, argue everyday meanings of words, and win based more on who was poetic and authoritative than on who really had the better argument. Picturing a debate over how many angels can fit on the head of a pin, it seems more like Calvinball than like mathematics.

This, then, is the heart of the accusation. Saying someone is just debating how many angels can dance on a pin isn’t merely saying they’re debating the invisible. It’s saying they’re debating in a way that won’t go anywhere, a debate without solid basis or reliable conclusions. It’s saying, not just that the debate is useless now, but that it will likely always be useless.

As an outsider, you can’t just dismiss a field because it can’t do experiments. What you can and should do is dismiss a field that can’t produce reliable knowledge. This can be hard to judge, but a key sign is to look for these kinds of Calvinball-style debates. Do people in the field seem to argue the same things with each other, over and over? Or do they make progress and open up new questions? Do the people talking seem to be just the famous ones? Or are there cases of young and unknown researchers who happen upon something important enough to make an impact? Do people just list prior work in order to state their counter-arguments? Or do they build on it, finding consequences of others’ trusted conclusions?

A few corners of string theory do have this Calvinball feel, as do a few of the debates about the fundamentals of quantum mechanics. But if you look past the headlines and blogs, most of each of these fields seems more reliable. Rather than interminable back-and-forth about angels and pinheads, these fields are quietly accumulating results that, one way or another, will give people something to build on.


I’m not sure if this is the right topic, but I’d like to ask you about a claim by Nima Arkani-Hamed, which seems to be endorsed by you and also by Lubos Motl, that spacetime is doomed.

This claim is based on two arguments. I’ll quote them from here:

https://pswscience.org/meeting/the-doom-of-spacetime/

Argument 1:

“To see what exactly is going on at arbitrarily small distances, we must use high energies. In a world without gravity, there is, in principle, no limit to scaling the size of the detector to see what is going on at increasingly small distances. But we live with gravity, and where there is too much mass, we get a black hole that traps light – meaning that if we build too big a detector, we will create a black hole that will prevent us from seeing what happens at the smallest distances. Thus, gravity limits our ability to measure spacetime, which means our current understanding of spacetime is merely approximate and not fully accurate.”

Argument 2:

“To measure such quantum observables, our precision improves by how many measurements we take. But, to take the infinitely many measurements required to reach almost exact precision, would require an infinitely large measuring apparatus, which is again limited by gravity. This limitation means quantum mechanics is also an approximation.”

When I look at the above arguments, I see no issue with spacetime itself. Yes, we cannot make infinitely accurate measurements and we cannot directly observe infinitely small objects. But how do you get from the inability to measure X to the conclusion that X does not exist?

Even more, if we assume, for the sake of the argument, that one can indeed show a contradiction between spacetime and GR + QM, the conclusion is that at least one theory (GR or QM) must be wrong (since spacetime enters both). If this is the case, why should we trust the very predictions on which those arguments are based?


For your first question, from a practical perspective there isn’t a huge difference between something that has no measurable consequences and something that doesn’t exist. One can insist that something completely unmeasurable exists anyway, but unless you have quite clear rules about which such things you are considering, you risk the kind of “Calvinball” debates I mention in the post. More usefully, something being unmeasurable has in the past been a pretty good hint that physicists ought to work around it in some way: doing so tends to lead to more powerful mathematics and new physical discoveries. That’s the kind of hint that Nima is going off of here: if gravity makes measuring on infinitely small scales impossible, then that suggests there is a better way to do physics that avoids those infinitely small scales.

For your second question, on some level that’s kind of the point. An issue with spacetime is an issue with either GR, QM, or both, and a suggestion that our naive extension of those theories is wrong and something should amend them. “Spacetime is doomed” is a way to summarize that point.


“For your first question, from a practical perspective there isn’t a huge difference between something that has no measurable consequences and something that doesn’t exist.”

So, it’s just a case of Occam’s razor. The theories we have are just fine (at least in regard to the arguments discussed here), yet Nima wants to replace them with some other, more parsimonious ones. Like getting rid of field theories (as fields cannot be measured at a location where there are no field sources) and going back to Newton’s forces acting directly between particles?

“something being unmeasurable has in the past been a pretty good hint that physicists ought to work around it in some way: doing so tends to lead to more powerful mathematics and new physical discoveries.”

Can you give some examples? Usually there is some sort of contradiction (experimental or theoretical) that points to better theories. One example could be Lorentz ether theory/special relativity, but it is questionable to what degree replacing the former with the latter led to discoveries.

Another thing that bothers me is this: is it in fact possible to measure something with unlimited accuracy even in the absence of gravity/black holes? I have no idea how one could do that, regardless of the theory. How would you measure exactly the position of an electron (assumed to be a classical particle) in classical electromagnetism? Or the position of a mass in GR? Or the position of an electron in QED? In all situations the exact state of the instrument is unknown and unknowable (since if you try to measure the instrument itself you need another instrument, itself in an unknown state, and so on).


For the first, you’ve got the right idea though the wrong solution. Newtonian theories with particles have the same kind of problem: you can still zoom in as far as you’d like. Instead, the idea would be for some theory to have a mathematical structure that naturally “cuts out” smaller scales. String theory is an example of such a theory, and there are various ways it does this: for example, T-duality means that a sufficiently small extra dimension is equivalent to a larger extra dimension, so there’s sort of a “minimum relevant size” an extra dimension can have. In general the idea is not to have a hard cut-off scale, but to have something smooth that nonetheless prevents one from looking too small.

In general, I see progress in science as coming from a combination of experimental mysteries and theoretical insights, where the latter often take the form “what if x didn’t really matter?” Special relativity is the typical example. It’s not that the theoretical concerns are decisive on their own (as you say, you can plug in some Lorentz ether if you really want to); rather, it’s that thinking in terms of rejecting ether helps you discover special relativity in the first place, and helps you reason about it later (for example, the easiest way to think about things like the barn door paradox is to focus on what one person can measure). General relativity is a similar story, with choices of coordinates being one of the key un-measurable things that gets ignored. One can tell a similar story about quantum mechanics and throwing out the question “what is a thing before you measure it”, though that’s more controversial. I’d argue that getting comfortable with field theory after Maxwell was an example of this: as scientists got used to not having to propose complicated systems of gears to describe fields, they became more productive. You can add gauge theory to this list too, though for that one there wasn’t really ever a point where people thought the gauge was “real” in the same way.

The question is not about measuring any given thing with literally infinite accuracy, but merely with unbounded accuracy. That is, without taking gravity into account, you can always measure something better. You can always build a better instrument, whose properties you’ve measured better, and get closer to an accurate result. It gets harder and harder, but there’s no “in principle” point you are forced to stop. Gravity cuts this off, it says that at some point your chain of apparatuses measuring apparatuses is not only expensive, it’s impossible, it’s going to collapse to a black hole and you won’t be able to measure what’s inside.


(Also, adding to my list of “ignoring unmeasurable things helps”, a lot of my and Nima’s motivation likely comes from our own field. Scattering amplitudes research has made a lot of progress by trying to represent everything we can in terms of only measurable particles, and to try to build up the answers to measurements without making any assumptions of what happens in-between.)


Thanks for your elaborate answer!

“You can always build a better instrument, whose properties you’ve measured better, and get closer to an accurate result. It gets harder and harder, but there’s no “in principle” point you are forced to stop.”

That may be true, but I have not seen any clear example of how to do it. In order to measure a particle’s position you need to bump it into something. That something can be another particle or some large object, like a cloud chamber or a fluorescent screen, or a narrow slit. Those objects in turn are built out of many particles. Once you reach atomic-level resolution, how do you improve on it? Nima says that you should repeat the measurement many times, but how? Each measurement perturbs the particle’s position, so making many measurements and averaging them does not seem to work.


So I think part of the confusion here comes from mixing together what you described as “Argument 1” and “Argument 2”, and part comes from the summary on that page being not quite what Nima said in the talk.

“Argument 1” is about probing smaller and smaller scales with higher and higher energies. That doesn’t require that you measure your detector perfectly, as long as it’s a big enough detector. It just requires that your “lever arm” is big enough. LHC experiments already probe sub-atomic scales, and they do it because after a collision, the outgoing particles spread out to get to your detector, so the detector pixels don’t need to be nearly as small as the details of the collision they’re measuring, they just need to be far enough away that they can detect a small angle between the two paths.

“Argument 2” is about the limitations on quantum measurements based on being able to treat your apparatus as classical. This wasn’t made super-clear by the summary on that page, so you may want to listen to that part of the talk; it’s around the 30-minute mark. Basically, if you want to measure something you need to be able to treat your apparatus as classical, which means you’ve got a limit on your precision based on the size of quantum effects in your apparatus. The bigger your apparatus is, the smaller its de Broglie wavelength and the smaller the quantum effects that meaningfully change it. So if you want to measure a quantum phenomenon to some specific accuracy, you need a larger measurement device to do it. It’s not really about repeated measurements, just about coupling your quantum system to a large, reliable classical one.


I’ve listened to Nima’s speech again and I think I agree with him. The concept of spacetime is indeed incompatible with a fundamentally probabilistic view of physics, but not because of the arguments he puts forward, rather because of EPR. EPR proves that the only way to have a local theory is to “complete” QM with deterministic hidden variables. Since Nima does not seem to like hidden variables (do you know his opinion about EPR? I could not find anything about it), he needs to accept non-locality and, as a consequence, reject the space-time structure. So, he is right.

That being said, I still find his arguments unconvincing. I accept that it is more likely than not that a physical entity which cannot be measured under any circumstances is not real. So, he is right to suspect that, if unlimitedly precise measurements are not possible, the unlimited detail might not be there. I also agree that, in order to compensate for the probabilistic nature of QM, one needs big instruments so that they approximate a classical, perfectly reliable, deterministic machine. OK, so what stops me building a galaxy-sized instrument? Nothing, in principle. So, I can see no problem here. Yet, Nima introduces a supplementary condition. The instrument should fit inside a room. I find this condition arbitrary. Why should the instrument be small in size?

But let’s go on! For some reason, Nima wants an infinitely dense instrument. OK. That would create a black hole, I’m OK with that. You cannot see inside the black hole from outside its horizon, true. But why not go inside the horizon? This is a second arbitrary condition. Presumably, if the black hole is big enough you can do that, and you can continue looking at your instrument there. So, I still see no problem.

I don’t know if there is some knowledge regarding the state of matter inside a black hole. In the context of the string-theoretical calculation of BH entropy I remember there were some branes inside. This subject is way beyond me, but let’s call those branes, or whatever is there, BH stuff. Is there a limit to its density? If not, you might build an instrument out of BH stuff and continue improving the measurement precision. If there is a limit to that density, the instrument will still need to be larger than the room, even if smaller than the Schwarzschild radius. But in this case, you cannot blame space-time/gravity anymore, but rather the behavior of the BH stuff: it just cannot be compressed beyond a certain limit.

According to the other argument, distances smaller than the Planck limit (about 10^-33 cm) cannot exist because a particle with a de Broglie wavelength smaller than that creates a BH. Right, but what about performing a distance measurement with a precision higher than 10^-33 cm? I guess you could measure the distance between the mass centers of two black holes better than that. So, a distance smaller than the Planck length does have observational consequences after all, right?


IME Nima is very much in the “shut up and calculate” school of quantum interpretations. He’s also a quantum field theorist, so he thinks of locality in terms of the possibility of superluminal signaling, not ontology. So no, he doesn’t have the same interpretation of EPR that you do.

I’m not sure at the moment why you can’t have an infinitely large measuring device with constant density and avoid that part of the argument. Most of the points Nima’s making here are “popularization versions” of arguments I’ve seen the technical form of elsewhere, but this one I haven’t, so I don’t know the details of how it’s supposed to work. The challenge might be in getting all of the information about the measurement into one place where it can be used, but that’s just me speculating.

When talking about black holes, we should distinguish our starting point of naively combining QM and GR from candidate quantum gravity theories. In normal GR, just going past the event horizon with your measuring device doesn’t really help you, if the device collapsed first its light will never reach you no matter how deep you get. String theory may well give you a way around it, but I think Nima would argue (and I would agree) that that’s because string theory in some sense gets rid of spacetime: when your metric is just the lowest-lying excitation of a bunch of closed strings it isn’t really “spacetime” in the normal sense. Nima tends to be an advocate of string theory, and I think his expectation is that the “spacetime is doomed” perspective will yield insights that among other things will help people understand string theory better.

By the way, trying to perform a distance measurement with a precision higher than the Planck limit would still result in a black hole, because you’d need an object with a sufficiently small de Broglie wavelength to probe that difference, just like a microscope will only give you information with a precision of the wavelength of the light you use. You can’t measure the distance between the centers of two black holes to better than Planck length precision.
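A rough numerical sketch of why the cutoff lands near the Planck length, under simplifying assumptions made here for illustration (not Nima's own argument): treat a probe's resolving power as its reduced Compton wavelength ħ/(mc) (for a relativistic probe of energy E this is about ħc/E with m = E/c²), and say it collapses once that wavelength is no larger than its classical-GR Schwarzschild radius 2Gm/c². Order-one factors mean nothing here; the point is only where the two lengths cross.

```python
import math

# CODATA 2018 values in SI units
HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s
G = 6.67430e-11         # Newton's constant, m^3 kg^-1 s^-2


def compton_wavelength(mass_kg: float) -> float:
    """Reduced Compton wavelength hbar/(m c): roughly the smallest scale a probe of this mass resolves."""
    return HBAR / (mass_kg * C)


def schwarzschild_radius(mass_kg: float) -> float:
    """Horizon radius 2Gm/c^2 of a mass m in classical general relativity."""
    return 2.0 * G * mass_kg / C**2


def crossover_mass() -> float:
    """Mass at which the two lengths coincide: hbar/(m c) = 2Gm/c^2."""
    return math.sqrt(HBAR * C / (2.0 * G))


planck_length = math.sqrt(HBAR * G / C**3)
m_star = crossover_mass()

print(f"Planck length:           {planck_length:.3e} m")
print(f"crossover mass:          {m_star:.3e} kg")
print(f"resolution at crossover: {compton_wavelength(m_star):.3e} m")

# Heavier probes have a shorter wavelength, but their horizon is now larger than it.
for factor in (0.1, 1.0, 10.0):
    m = factor * m_star
    print(f"m = {factor:>4} m*: wavelength = {compton_wavelength(m):.2e} m, "
          f"r_s = {schwarzschild_radius(m):.2e} m")
```

In this naive picture the crossover sits at √2 times the Planck length, and above it the horizon grows with the probe's mass, so pushing the energy higher does not buy any more resolution.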


I found this paper by Nima:

https://direct.mit.edu/daed/article/141/3/53/27043/The-Future-of-Fundamental-Physics

He explains a little bit his view of QM:

“Quantum mechanics represented a more radical departure from classical physics, involving a completely new conceptual framework, both physically and mathematically. We learned that nature is not deterministic, and only probabilities can be predicted.”

OK, so he thinks that QM proves that nature is not deterministic. This view is wrong, but it explains his position. He also says:

“it is of fundamental importance to physics that we cannot speak precisely of position and momentum, but only position or momentum.”

I think we can speak about position AND momentum, no problem. We cannot determine position and momentum in a single measurement. There is no mystery here, the experimental setups are different. You cannot have a slit that is fixed and mobile at the same time. So we need to measure them one after another (how else?). But since each measurement disturbs the system (also true in classical physics as a consequence of Newton’s third law) you always have some error. This does not mean the particle does not have a position and momentum, only that you cannot measure them both with perfect accuracy. The consequence is that you cannot prepare a state where position and momentum are both certain, hence QM does not allow such a state in its formalism. Again, nothing deep or mysterious here.

But it’s entirely possible to know both the past position and momentum with any accuracy. You can switch on an electron gun for a very short time and detect the electron on a screen at some arbitrary distance. You note the time of emission and the time of arrival and you can calculate from there position AND momentum. Even Heisenberg said that “the uncertainty relation does not hold for the past”.

“he thinks of locality in terms of the possibility of superluminal signaling, not ontology. So no, he doesn’t have the same interpretation of EPR that you do.”

EPR does not depend on any interpretation. If the A measurement is random it has to cause B, otherwise the perfect correlation cannot be obtained. But if A and B are space-like, relativity says that A cannot cause B. So, it’s a sharp contradiction that does not go away by using a different definition of locality. You cannot get A to cause B in relativity by saying that you can’t send a message with it. One’s ability to send messages is irrelevant here.

On the problem of local observables, he says:

“If we are making a local measurement in a finite-sized room, at some large but finite size it becomes so heavy that it collapses the entire room into a black hole. This means that there is no way, not even in principle, to make perfectly accurate local measurements, and thus local observables cannot have a precise meaning.”

I still don’t understand what the existence of the local observables has to do with the size of the instrument. I don’t think this argument works. But sure, this paper is still not intended for professional physicists so maybe some more elaborate version of this argument exists somewhere.

“The challenge might be in getting all of the information about the measurement into one place where it can be used, but that’s just me speculating.”

In this case you will create a black hole simply by writing an infinite number on paper or an SSD. So, even without quantum fluctuations, you can’t do it. So, spacetime is doomed not because of random noise but because of the quantization of matter. You need an infinite number of particles, and so an infinite mass, to store an infinite number.

“In normal GR, just going past the event horizon with your measuring device doesn’t really help you, if the device collapsed first its light will never reach you no matter how deep you get.”

So, you need to jump first. See, problem solved 😊

“By the way, trying to perform a distance measurement with a precision higher than the Planck limit would still result in a black hole, because you’d need an object with a sufficiently small de Broglie wavelength to probe that difference”

I disagree. For the orbiting black holes you only need to measure their mass and orbital period. This does not involve energetic particles. The ellipse can then be calculated.

But I think a better example comes from LIGO. They can measure a distance of 10^-20 m using lasers with a wavelength of 10^-6 m. So, if this can be scaled down, you could probe the Planck regime using light with a wavelength around 10^-21 m. Such light has been detected:

https://en.wikipedia.org/wiki/Ultra-high-energy_gamma_ray

If Nima is right, those gamma photons should not interfere. I find this hard to believe.
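The scaling in the LIGO argument is simple arithmetic, sketched below. The inputs are the order-of-magnitude figures quoted in the comment (10^-20 m resolved with 10^-6 m light), taken at face value, plus a Planck length of about 1.6×10^-35 m. The assumption that LIGO's ratio carries over unchanged to far shorter wavelengths is the commenter's hypothesis, not an established fact.

```python
# Rough arithmetic for the LIGO-scaling argument.
# ASSUMPTION (illustration only): the ratio between the displacement LIGO resolves
# and its laser wavelength stays fixed when the wavelength is made much shorter.

HC_EV_M = 1.239841984e-6      # h*c in eV*m
LIGO_DISPLACEMENT_M = 1e-20   # order of magnitude as quoted in the comment
LIGO_WAVELENGTH_M = 1e-6      # order of magnitude as quoted in the comment
PLANCK_LENGTH_M = 1.616e-35


def required_wavelength(target_m: float) -> float:
    """Wavelength needed to resolve target_m if the LIGO ratio (~1e-14) is kept fixed."""
    ratio = LIGO_DISPLACEMENT_M / LIGO_WAVELENGTH_M
    return target_m / ratio


def photon_energy_ev(wavelength_m: float) -> float:
    """Photon energy E = h c / lambda, in eV."""
    return HC_EV_M / wavelength_m


lam = required_wavelength(PLANCK_LENGTH_M)
energy = photon_energy_ev(lam)
print(f"wavelength ~ {lam:.1e} m, photon energy ~ {energy:.1e} eV ({energy / 1e12:.0f} TeV)")
```

With those inputs the required wavelength comes out near 10^-21 m, i.e. photon energies of several hundred TeV, which falls in the range Wikipedia labels ultra-high-energy gamma rays (above 100 TeV).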


Not interested in getting into the weeds on QM interpretations here, but keep in mind that there are in fact such people as, for example, QBists. Either way these questions don’t particularly matter for “is spacetime doomed”.

“No local observables in quantum gravity” is actually a bit of a different claim than the claims Nima is arguing with black holes. This is a bit hard to see from the text if you don’t know what you’re looking for beforehand, because “no local observables” isn’t actually something he justifies in the text, he just makes the statement:

“The problems with space-time are not only localized to small distances; in a precise sense, “inside” regions of space-time cannot appear in any fundamental description of physics at all.”

That’s because this is a claim with a technical justification that’s hard to express in popular language. For a technical discussion of it, see this stackexchange answer.

The claims Nima was gesturing at when he talked about black holes stopping you from measuring various things cash out in a few different ways. I already distinguished the story with a large measuring device from the story with high-energy probes. Seeing more of Nima’s argument, I think the reason he’s requiring a finite-sized room for the former story is locality. An infinite measuring device would also require an infinite time to make a measurement. At that point you’re not really talking about a “local” measurement, you need information about the whole length and breadth of space-time. “There are no local observables” still lets you measure “global” observables over all of space and time. I’m still not 100% sure this is what he’s gesturing at here, in QFT we don’t tend to do much with details of measuring devices in this kind of way so I’m not sure whether he’s summarizing a point that brings in logic from an adjacent subfield.

The latter story on a technical level amounts to the statement that gravity should have very different degrees of freedom at high energies/on small scales. Any question you ask about small scales is ultimately also a question about high energies, and gravity gets strong at high energies: you can no longer approximate it as nice little perturbations on top of a smooth metric, and black holes should make a lot of the questions you want to answer stop making sense. This gets linked to things like nonrenormalizability.

“Just jump in first” doesn’t do anything, remember, the black hole comes from you trying to probe the system with a high-energy particle, there’s nowhere to “jump” before you do your experiment.

Similarly, you can’t just “measure the mass and orbital period” because you still need comparable precision on those parameters. Remember, for these black holes to be distinct they have to be extremely small, otherwise they will be within each other’s event horizons and indistinguishable from a single larger black hole. This means they won’t exist for very long. (If you’re up for doing the math, it might be cute to check whether they’re even around for a full orbital period!) So you need a clock that fluctuates fast enough to distinguish, and thus something of very high energy.
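Taking up the invitation in the parenthetical, here is a crude version of that check. It is a sketch resting on loud assumptions added here: the semiclassical Hawking lifetime in its photon-only form, 5120πG²M³/(ħc⁴) (emitting more particle species would shorten it), a Newtonian circular orbit for two equal masses, and a separation of ten Schwarzschild radii (the `separation_in_rs` parameter, an arbitrary illustrative choice). Near the Planck mass neither formula should be trusted, so treat the output as a cartoon of how the comparison scales rather than as an answer.

```python
import math

# CODATA 2018 values in SI units
HBAR = 1.054571817e-34  # J*s
C = 299_792_458.0       # m/s
G = 6.67430e-11         # m^3 kg^-1 s^-2
PLANCK_MASS = math.sqrt(HBAR * C / G)  # ~2.18e-8 kg


def evaporation_time(mass_kg: float) -> float:
    """Semiclassical Hawking lifetime, photon-only estimate: 5120*pi*G^2*M^3/(hbar*c^4)."""
    return 5120.0 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)


def orbital_period(mass_kg: float, separation_m: float) -> float:
    """Newtonian period of two equal masses M in a circular orbit: 2*pi*sqrt(d^3/(G*2M))."""
    return 2.0 * math.pi * math.sqrt(separation_m**3 / (G * 2.0 * mass_kg))


def orbits_before_evaporation(mass_kg: float, separation_in_rs: float = 10.0) -> float:
    """Number of orbits that fit into one lifetime, with the separation in units of r_s = 2GM/c^2."""
    r_s = 2.0 * G * mass_kg / C**2
    return evaporation_time(mass_kg) / orbital_period(mass_kg, separation_in_rs * r_s)


for m_over_mp in (1.0, 10.0, 100.0):
    n = orbits_before_evaporation(m_over_mp * PLANCK_MASS)
    print(f"M = {m_over_mp:>5} m_P: ~{n:.1e} orbits")
```

In this cartoon the number of orbits grows like (M/m_P)², so the answer hinges on exactly how small the black holes have to be, and it is precisely near the Planck scale that these semiclassical formulas stop being reliable.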

LIGO is a more interesting thing to bring up, since indeed they’re able to wring a large number of orders of magnitude out of lower-frequency light. I have the impression this isn’t the kind of thing you can generalize to probe high-energy physics, but I don’t quite remember the argument and I’d have to look around to see how it works.


Regarding whether you could notice that “spacetime is doomed” with something like an interferometer, you might find this paper interesting. I suspect the content is still a bit controversial but it at least illustrates one way people are thinking about this.


To synthesize and elaborate parts of my last two replies (after reading more of the paper I linked in the second): the fact that there is a high-energy small-distance regime in which you see black holes means that in that regime space-time breaks down and we shouldn’t expect a fundamental theory that includes that regime to look like smooth space-time. It does not mean that every possible measurement with Planck length precision will show this behavior. An interferometric measurement might show something unusual, but if it did it would likely need to be because quantum gravity breaks Lorentz invariance in some fashion. That’s not a property of string theory: in string theory you could do that kind of interferometric experiment and not notice anything unusual. So I suspect Nima would expect that those kinds of interferometric experiments are unlikely to see any sign of “spacetime being doomed”, but regardless that doesn’t contradict the black hole argument: the point is that there are some regimes in which you need a theory that abandons space-time on some level in order to understand them.


Hopefully unrelated, something interesting in mathematical physics a friend sent me

https://www.quantamagazine.org/mathematicians-prove-2d-version-of-quantum-gravity-really-works-20210617/

Just add a few dimensions and this might be useful, right?


Heh, maybe!

I helped the author of that piece a bit in the early stages, when he was trying to figure out what all those different quantum field theorists were telling him. Looks like it turned out well!


All these arguments about Planck-sized black holes depend on the assumption that cosmic censorship applies at such scales. If such huge energy densities instead produce naked timelike singularities, then these kinds of arguments are invalid.


As I tried to clarify to Andrei, “spacetime is doomed” is really an argument about GR. If you’re replacing GR with something else with some other properties then you’ve likely already “doomed” spacetime in some sense.


“I suspect Nima would expect that those kinds of interferometric experiments are unlikely to see any sign of “spacetime being doomed”, but regardless that doesn’t contradict the black hole argument: the point is that there are some regimes in which you need a theory that abandons space-time on some level in order to understand them.”

Thanks for this clarification, it makes a lot of sense!

There is however another potential problem with this line of thought. As far as I know a black hole “evaporates”, and this process seems to preserve unitarity (black hole wars). So, even if a black hole forms in a Planck accelerator it is just a temporary state. It will decay and the outgoing particles can be observed. So, it seems that the formation of a black hole does not limit the ability of the experimenter to probe the sub-Planckian regime.

There is another question that bothers me. It’s unrelated to Nima’s arguments, but I think it fits with the spirit of this thread. It’s about the string-theoretical calculation of the vacuum energy, the one that gives 120 orders of magnitude (60 with supersymmetry) above the observed one.

What is the meaning of this value? Is this the energy one expects to find if a measurement is performed (as QM predicts in general), or is it the energy that is “there” even if nobody is looking? If the former is true, I see no problem for string theory, because no such measurements are actually performed, so there is nothing there to contribute to the cosmological constant. If it’s the latter, I would be curious to know how this is possible in QM. QM shouldn’t tell you what is there when nobody is looking, right? Or is the observation of the cosmological constant itself a measurement?

Thanks!

Black hole evaporation doesn’t preserve unitarity, at least not judging by Hawking’s calculation alone. Many physicists expect that it should preserve unitarity in a full theory of quantum gravity, and how exactly that could happen/whether it actually does continues to be a hot topic of discussion (hence black hole wars). Suffice it to say that whatever new physics you invoke that lets you measure the details of the interior of a black hole, it probably “dooms spacetime” in some way or other.

The calculation that comes out 120 orders of magnitude wrong isn’t a string theory calculation, to be clear; it’s just an order-of-magnitude estimate using ordinary quantum field theory. (Also, I’m not sure the 60 is with supersymmetry. Glancing at Wikipedia, it looks like that’s just a more modern version of the same calculation, but I’m not 100% sure.)
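To get a feel for where a number like “120 orders of magnitude” comes from, here is a minimal Python sketch of the crudest version of that estimate. It assumes you add up zero-point energies of field modes up to a cutoff at the Planck length, which gives an energy density of c^7/(16π² ħ G²), and compare that with the dark-energy density we actually observe. The cosmological parameters (H0 = 67.7 km/s/Mpc, Ω_Λ = 0.69) are assumed round values close to recent fits, and the code drops the 1/(16π²) factor, so treat the output as an order of magnitude and nothing more.

```python
import math

# Physical constants in SI units (rounded CODATA values)
C = 2.998e8           # speed of light, m/s
HBAR = 1.0546e-34     # reduced Planck constant, J*s
G = 6.674e-11         # Newton's constant, m^3 kg^-1 s^-2

# Assumed cosmological parameters (round numbers, not a precise fit)
H0_KM_S_MPC = 67.7    # Hubble constant, km/s/Mpc
OMEGA_LAMBDA = 0.69   # fraction of the critical density in dark energy
MPC_IN_M = 3.0857e22  # one megaparsec in metres


def planck_energy_density() -> float:
    """Naive QFT estimate: cut off vacuum modes at the Planck length and
    drop the 1/(16 pi^2) factor, leaving c^7 / (hbar G^2) in J/m^3."""
    return C**7 / (HBAR * G**2)


def observed_dark_energy_density() -> float:
    """Observed vacuum energy density: Omega_Lambda times the critical
    density 3 H0^2 / (8 pi G), converted to J/m^3 via E = m c^2."""
    h0 = H0_KM_S_MPC * 1e3 / MPC_IN_M           # H0 in 1/s
    rho_crit = 3 * h0**2 / (8 * math.pi * G)    # kg/m^3
    return OMEGA_LAMBDA * rho_crit * C**2


def orders_of_magnitude_off() -> float:
    """log10 of the ratio between the naive estimate and the observation."""
    return math.log10(planck_energy_density() / observed_dark_energy_density())


if __name__ == "__main__":
    print(f"{orders_of_magnitude_off():.1f} orders of magnitude")
```

With these inputs the ratio lands a bit above 10^120. Keeping the 1/(16π²) factor lowers it by roughly two orders of magnitude, and choosing a different cutoff moves it far more, which is one reason people argue about how seriously to take the estimate at all.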

Anyway, your last thought there is the right one: the cosmological constant is indeed an observable, we measure it when we measure that the universe’s expansion is accelerating. I think what’s confusing here is that people tend to describe this calculation as a calculation of the vacuum energy, which sounds like “energy nobody is measuring”. I have a post that explains this in some detail: basically, the calculation we do looks like a “nothing in, nothing out” process, but it’s standing in for an interaction with something else.

I also say a bit at the end of that post about why you might or might not trust that 120 orders of magnitude estimate. It’s very closely related to the “naturalness” argument, which I talk about here.

Finally, I suspect the reason you were thinking of this as a string theory calculation is that you’ve heard it presented in the context of the string theory landscape. In string theory, different configurations of the extra dimensions (in particular, the flux of energy flowing through them) give different values of the cosmological constant. There are many different stable configurations, so there are many different values that all seem consistent with string theory, including the observed one and the 120 orders-off one. (There’s also some controversy about whether this particular type of string theory calculation actually works, but that’s an entirely different rabbit-hole.)

“Black hole evaporation doesn’t preserve unitarity, at least not judging by Hawking’s calculation alone.”

It was my understanding that Susskind won the “war”, as Hawking himself accepted. Still, this seems to be an open question, so Nima’s argument becomes even weaker, something like “maybe you cannot see below the Planck limit, so maybe spacetime is doomed”. Or maybe not.

Regarding the vacuum energy calculation you say:

“Normally in particle physics, we think about our particles in an empty, featureless space. We don’t have to, though. One thing we can do is introduce features in this space, like walls and mirrors, and try to see what effect they have. We call these features “defects”.

If there’s a defect like that, then it makes sense to calculate a one-point diagram, because your one particle can interact with something that’s not a particle: it can interact with the defect.”

My question here is whether it is correct to extrapolate the energy calculated in the presence of those defects to a universe without them. This seems to me contrary to the way QM is used: one should not use a measurement result obtained in one experimental context for a different context. Clearly the calculation applies to a universe full of those defects, but what if the energy is actually associated with the defects themselves?

The paper “The Casimir Effect and the Quantum Vacuum” seems to agree with this point:

“I have presented an argument that the experimental confirmation of the Casimir effect does not establish the reality of zero point fluctuations. Casimir forces can be calculated without reference to the vacuum”

Hawking came around not because he found an error in his own calculation, but because he was persuaded that in a sensible quantum gravity theory (Susskind would have been using string theory for this), unitarity will be preserved. The message “spacetime is doomed” is popularization-speak for two claims: that one needs a proper theory of quantum gravity, and that such a theory will look very different from GR on small scales, to the point that describing things as “spacetime” on that level doesn’t really make sense. String theory is an example of a quantum gravity theory in which things look very different at small scales in this way.

The point I was making in that post is that both defect one-point functions and vacuum diagrams are calculations you do not because there are actual defects/actual “vacuum energy”, but because they’re a good way to approximate interaction with something else, like a large near-classical object. The Casimir effect is an example of this: it’s a quantum electromagnetic interaction between the two plates. Calling it “vacuum energy” is in some sense just a reference to the approximation used, and in that sense I agree with that quote, indeed “Casimir forces can be calculated without reference to the vacuum”. (I don’t know if I’d agree with the rest of that paper, though, it depends on what they mean by that!)

“The point I was making in that post is that both defect one-point functions and vacuum diagrams are calculations you do not because there are actual defects/actual “vacuum energy”, but because they’re a good way to approximate interaction with something else, like a large near-classical object.”

OK, but why is this calculation relevant if there is no object around? Is there any justification for this? And if not isn’t the cosmological constant problem a false problem?

There is an object around, though: galaxies are objects, for example, and we use their movement to measure the cosmological constant astronomically. The cosmological constant interacts gravitationally with all other matter. The picture with a vacuum diagram is a way to summarize interactions between many different sorts of macroscopic objects, so rather than calculating them one by one, you just calculate the universal part that would be the same in each calculation.