I have been corresponding with Subir Sarkar, one of the authors of the paper I mentioned a few weeks ago arguing that the evidence for cosmic acceleration was much weaker than previously thought. He believes that the criticisms of Rubin and Hayden (linked to in my post) are deeply flawed. Since he and his coauthors haven’t responded publicly to Rubin and Hayden yet, they graciously let me post a summary of their objections.

Dear Matt,

This concerns the discussion on your blog of our recent paper showing that the evidence for cosmic acceleration from supernovae is only 3 sigma. Your obviously annoyed response is in fact to inflated headlines in the media about our work – our paper does just what it says on the can: “Marginal evidence for cosmic acceleration from Type Ia supernovae“. Nevertheless you make a fair assessment of the actual result in our paper and we are grateful for that.

However we feel you are not justified in going on further to state: “In the twenty years since it was discovered that the universe was accelerating, people have built that discovery into the standard model of cosmology. They’ve used that model to make other predictions, explaining a wide range of other observations. People have built on the discovery, and their success in doing so is its own kind of evidence”. If you were as expert in cosmology as you evidently are concerning amplitudes you would know that much of the reasoning you allude to is circular. There are also other instances (which we are looking into) of using statistical methods that assume the answer to shore up the ‘standard model’ of cosmology. Does it not worry you that the evidence from supernovae – which is widely believed to be compelling – turns out to be less so when examined closely? There is a danger of confirmation bias in that cosmologists making poor measurements with large systematic uncertainties nevertheless keep finding the ‘right answer’. See e.g. Croft & Dailey (http://adsabs.harvard.edu/cgi-bin/bib_query?arXiv:1112.3108) who noted “… of the 28 measurements of Omega_Lambda in our sample published since 2003, only 2 are more than 1 sigma from the WMAP results. Wider use of blind analyses in cosmology could help to avoid this”. Unfortunately the situation has not improved in subsequent years.
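To see why the Croft & Dailey statistic is striking, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that the 28 measurements are independent, Gaussian, and have accurate error bars, and it treats the WMAP value as exact. None of these holds strictly (many of the measurements share data, and WMAP has its own uncertainty), so the number is only indicative.

```python
from math import comb, erf, sqrt

# Two-sided probability that one Gaussian measurement falls more than
# 1 sigma from the true value: 1 - erf(1/sqrt(2)), about 0.317.
p_outside = 1.0 - erf(1.0 / sqrt(2.0))

n = 28      # measurements of Omega_Lambda in the quoted sample
k_max = 2   # number found more than 1 sigma from WMAP

expected_outside = n * p_outside

# Binomial probability of seeing at most k_max outliers, under the
# (strong) assumption of independent, correctly-sized error bars.
p_tail = sum(
    comb(n, k) * p_outside**k * (1 - p_outside) ** (n - k)
    for k in range(k_max + 1)
)

print(f"expected beyond 1 sigma: {expected_outside:.1f} of {n}")
print(f"P(at most {k_max} beyond 1 sigma): {p_tail:.1e}")
```

Under these idealised assumptions one would expect roughly 9 of the 28 results to lie beyond 1 sigma, and seeing 2 or fewer has a probability of order 10^-3. Correlations between the measurements would make the true number less extreme.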

You are of course entitled to air your personal views on your blog. But please allow us to point out that you are being unfair to us by uncritically stating in the second part of your sentence: “EDIT: More arguments against the paper in question, pointing out that they made some fairly dodgy assumptions” in which you link to the arXiv eprint by Rubin & Hayden.

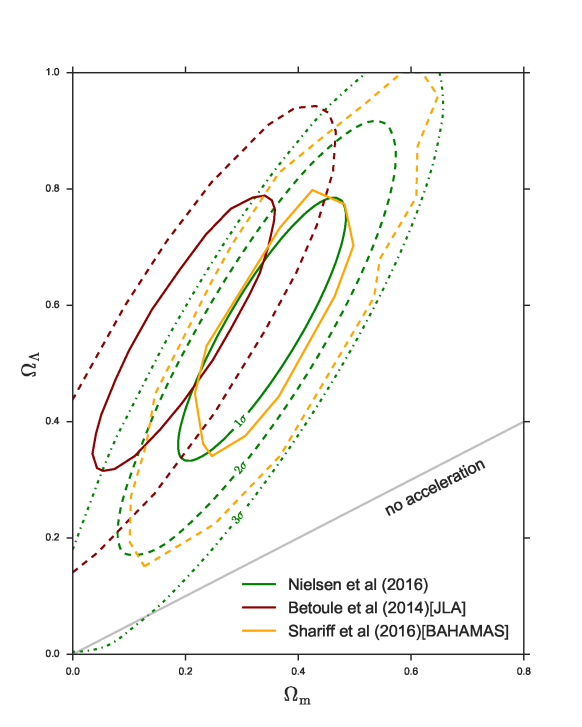

These authors make a claim similar to Riess & Scolnic (https://blogs.scientificamerican.com/guest-blog/no-astronomers-haven-t-decided-dark-energy-is-nonexistent/) that we “assume that the mean properties of supernovae from each of the samples used to measure the expansion history are the same, even though they have been shown to be different and past analyses have accounted for these differences”. In fact we are using exactly the same dataset (called JLA) as Adam Riess and co. used in their own analysis (Betoule et al, http://adsabs.harvard.edu/cgi-bin/bib_query?arXiv:1401.4064). They found stronger evidence for acceleration because they used a flawed statistical method (“constrained \chi^2”). The reason why we find weaker evidence is that we use the Maximum Likelihood Estimator – it is not because of making “dodgy assumptions”. We show our results in the same \Omega_\Lambda – \Omega_m plane simply for ease of comparison with the previous result – as seen in the attached plot, the contours move to the right … and now enclose the “no acceleration” line within 3 \sigma. Our analysis is not – as Brian Schmidt tweeted – “at best unorthodox” … even if this too has been uncritically propagated on social media.
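For readers outside cosmology, the "no acceleration" line in the \Omega_\Lambda – \Omega_m plane is where the present-day deceleration parameter q_0 vanishes. For a universe containing only pressureless matter and a cosmological constant (radiation neglected, which is an assumption of this sketch), q_0 = \Omega_m / 2 - \Omega_\Lambda, so the line is \Omega_\Lambda = \Omega_m / 2. A minimal illustration:

```python
def deceleration_parameter(omega_m: float, omega_lambda: float) -> float:
    """Present-day q_0 for pressureless matter plus a cosmological constant
    (radiation neglected): q_0 = Omega_m / 2 - Omega_Lambda."""
    return 0.5 * omega_m - omega_lambda


def is_accelerating(omega_m: float, omega_lambda: float) -> bool:
    """Accelerating expansion today means q_0 < 0."""
    return deceleration_parameter(omega_m, omega_lambda) < 0.0


# Points below the line Omega_Lambda = Omega_m / 2 decelerate,
# points above it accelerate, and points on it have q_0 = 0.
for om, ol in [(0.3, 0.7), (0.3, 0.15), (1.0, 0.0)]:
    print(om, ol, deceleration_parameter(om, ol), is_accelerating(om, ol))
```

A confidence contour that "encloses the no-acceleration line" therefore contains parameter pairs with q_0 >= 0.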

In fact the result from our (frequentist) statistical procedure has been confirmed by an independent analysis using a ‘Bayesian Hierarchical Model’ (Shariff et al, http://adsabs.harvard.edu/cgi-bin/bib_query?arXiv:1510.05954). This is a more sophisticated approach because it does not adopt a Gaussian approximation, as we did, for the distribution of the light curve parameters (x_1 and c); however, their contours are more ragged because of numerical computation limitations.
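For context, the light curve parameters x_1 (stretch) and c (colour) enter JLA-style analyses through a linear standardisation of the peak magnitude, mu = m_B - (M_B - alpha * x_1 + beta * c). The sketch below shows only that relation; the values of alpha, beta and M_B are illustrative placeholders assumed here, not fitted numbers from either paper, and the population model for x_1 and c (the part under dispute) is not included.

```python
def distance_modulus(m_b, x1, c, alpha=0.14, beta=3.1, abs_mag=-19.05):
    """Tripp-style standardisation of a SALT2 light-curve fit:
    mu = m_B - (M_B - alpha * x1 + beta * c).
    alpha, beta and abs_mag are illustrative placeholders (assumptions)."""
    return m_b - (abs_mag - alpha * x1 + beta * c)


def luminosity_distance_mpc(mu):
    """Invert mu = 5 log10(d_L / 10 pc) and return d_L in megaparsecs."""
    return 10 ** (mu / 5 + 1) / 1e6


# A slower-declining (x1 > 0) supernova is intrinsically brighter, so the
# same observed m_B implies a larger distance; a redder one (c > 0) is
# intrinsically fainter, so it implies a smaller distance.
print(distance_modulus(24.0, 0.0, 0.0))
print(distance_modulus(24.0, 1.0, 0.0))
print(distance_modulus(24.0, 0.0, 0.1))
```

Because every distance depends on how x_1 and c are modelled, assumptions about their distributions feed directly into the inferred expansion history.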

Rubin & Hayden do not mention this paper (although bizarrely they ascribe to us the ‘Bayesian Hierarchical Model’). Nevertheless they find more-or-less the same result as us, namely 3.1 sigma evidence for acceleration, using the same dataset as we did (left panel of their Fig.2). They argue however that there are selection effects in this dataset – which have not already been corrected for by the JLA collaboration (which incidentally included Adam Riess, Saul Perlmutter and most other supernova experts in the world). To address this Rubin & Hayden introduce a redshift-dependent prior on the x_1 and c distributions. This increases the significance to 4.2 sigma (right panel of their Fig.2). If such a procedure is indeed valid then it does mark progress in the field, but that does not mean that these authors have “demonstrated errors in (our) analysis” as they state in their Abstract. Their result also raises the question of why the significance has increased so little in going from the initial 50 supernovae which yielded 3.9 sigma evidence for acceleration (Riess et al, http://adsabs.harvard.edu/cgi-bin/bib_query?arXiv:astro-ph/9805201) to 740 supernovae in JLA? Maybe this is news … at least to anyone interested in cosmology and fundamental physics!
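The kind of selection effect at issue can be illustrated with a toy Monte Carlo. Every number below (magnitude limit, colour spread, absolute magnitude, beta, and the crude Hubble-law distance) is an assumption chosen for illustration, not a property of the JLA surveys. The true colour distribution is identical at all redshifts, yet a magnitude-limited survey preferentially loses faint, red supernovae at high redshift, so the *observed* mean colour drifts with redshift.

```python
import math
import random
from statistics import fmean

ABS_MAG = -19.05            # illustrative absolute magnitude (assumption)
BETA = 3.1                  # illustrative colour-luminosity coefficient (assumption)
MAG_LIMIT = 24.0            # hypothetical survey magnitude limit
C_MEAN, C_SIGMA = 0.0, 0.1  # assumed true colour population, same at every z
C_KMS, H0 = 299792.458, 70.0


def apparent_mag(z, colour):
    """Toy apparent magnitude using the low-z Hubble law d_L ~ cz/H0.
    This is a crude placeholder, not a real cosmological distance."""
    d_pc = C_KMS * z / H0 * 1e6
    mu = 5 * math.log10(d_pc / 10)
    # Redder supernovae (larger c) are fainter by BETA * c.
    return ABS_MAG + mu + BETA * colour


def observed_mean_colour(z, n=20000, seed=42):
    """Mean colour of the simulated supernovae that pass the magnitude cut,
    and the fraction detected."""
    rng = random.Random(seed)
    colours = (rng.gauss(C_MEAN, C_SIGMA) for _ in range(n))
    kept = [c for c in colours if apparent_mag(z, c) < MAG_LIMIT]
    return fmean(kept), len(kept) / n


for z in (0.1, 0.5, 0.9):
    mean_c, frac = observed_mean_colour(z)
    print(f"z={z}: mean observed c = {mean_c:+.3f}, fraction detected = {frac:.2f}")
```

In this toy, a prior that fixes the colour distribution to be the same at every redshift would misdescribe the detected sample at high z. Whether the JLA data already correct for this, and how large the effect really is, is exactly the point in dispute; the sketch only shows the mechanism.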

Rubin & Hayden also make the usual criticism that we have ignored evidence from other observations, e.g. of baryon acoustic oscillations and the cosmic microwave background. We are of course very aware of these observations but as we say in the paper the interpretation of such data is very model-dependent. For example dark energy has no direct influence on the cosmic microwave background. What is deduced from the data is the spatial curvature (adopting the value of the locally measured Hubble expansion rate H_0) and the fractional matter content of the universe (assuming the primordial fluctuation spectrum to be a close-to-scale-invariant power law). Dark energy is then *assumed* to make up the rest (using the sum rule: 1 = \Omega_m + \Omega_\Lambda for a spatially flat universe as suggested by the data). This need not be correct however if there are in fact other terms that should be added to this sum rule (corresponding to corrections to the Friedmann equation to account e.g. for averaging over inhomogeneities or for non-ideal gas behaviour of the matter content). It is important to emphasise that there is no convincing (i.e. >5 sigma) dynamical evidence for dark energy, e.g. from the late integrated Sachs-Wolfe effect, which induces subtle correlations between the CMB and large-scale structure. Rubin & Hayden even claim in their Abstract (v1) that “The combined analysis of modern cosmological experiments … indicate 75 sigma evidence for positive Omega_\Lambda” – which is surely a joke! Nevertheless this is being faithfully repeated on newsgroups, presumably by those somewhat challenged in their grasp of basic statistics.
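To make the role of the sum rule concrete, here is a sketch of the standard Friedmann equation for matter, a cosmological constant and curvature (radiation neglected), in which the curvature term is fixed by \Omega_k = 1 - \Omega_m - \Omega_\Lambda. It computes the supernova luminosity distance for a few parameter choices. The value H_0 = 70 km/s/Mpc is an assumption, and the code contains no extra terms of the kind the authors suggest might be needed; it shows only where the sum rule enters the standard model.

```python
import math

C_KMS = 299792.458  # speed of light in km/s


def hubble_ratio(z, omega_m, omega_lambda):
    """E(z) = H(z)/H0 for pressureless matter + Lambda + curvature.
    Curvature is set by the sum rule Omega_k = 1 - Omega_m - Omega_Lambda."""
    omega_k = 1.0 - omega_m - omega_lambda
    return math.sqrt(omega_m * (1 + z) ** 3 + omega_k * (1 + z) ** 2 + omega_lambda)


def comoving_integral(z, omega_m, omega_lambda, steps=1000):
    """Simpson's rule for the integral of dz'/E(z') from 0 to z."""
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = z / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += weight / hubble_ratio(i * h, omega_m, omega_lambda)
    return total * h / 3


def lcdm_luminosity_distance_mpc(z, omega_m, omega_lambda, h0=70.0):
    """Luminosity distance in Mpc; h0 in km/s/Mpc is an assumed value."""
    omega_k = 1.0 - omega_m - omega_lambda
    x = comoving_integral(z, omega_m, omega_lambda)
    if omega_k > 1e-12:        # open universe
        s = math.sinh(math.sqrt(omega_k) * x) / math.sqrt(omega_k)
    elif omega_k < -1e-12:     # closed universe
        s = math.sin(math.sqrt(-omega_k) * x) / math.sqrt(-omega_k)
    else:                      # spatially flat
        s = x
    return (1 + z) * (C_KMS / h0) * s


for om, ol in [(1.0, 0.0), (0.3, 0.0), (0.3, 0.7)]:
    print(om, ol, round(lcdm_luminosity_distance_mpc(1.0, om, ol), 1))
```

Any additional term in the Friedmann equation would change E(z), and hence the distances, without necessarily corresponding to a cosmological constant.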

Apologies for the long post but we would like to explain that the technical criticism of our work by Rubin & Hayden and by Riess & Scolnic is rather disingenuous and it is easy to be misled if you are not an expert. You are entitled to rail against the standards of science journalism but please do not taint us by association.

As a last comment, surely we all want to make progress in cosmology but this will be hard if cosmologists are so keen to cling on to their ‘standard model’ instead of subjecting it to critical tests (as particle physicists continually do to their Standard Model). Moreover the fundamental assumptions of the cosmological model (homogeneity, ideal fluids) have not been tested rigorously (unlike the Standard Model which has been tested at the level of quantum corrections). This is all the more important in cosmology because there is simply no physical explanation for why \Lambda should be of order H_0^2.
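The statement that \Lambda is "of order H_0^2" can be checked with a line of arithmetic, using \Omega_\Lambda = \Lambda c^2 / (3 H_0^2). The inputs H_0 = 70 km/s/Mpc and \Omega_\Lambda = 0.7 are assumed round numbers for illustration.

```python
H0_KM_S_MPC = 70.0       # assumed Hubble constant in km/s/Mpc
OMEGA_LAMBDA = 0.7       # assumed dark-energy fraction
MPC_IN_M = 3.0857e22     # metres per megaparsec
C_M_S = 299792458.0      # speed of light in m/s

h0_si = H0_KM_S_MPC * 1e3 / MPC_IN_M                 # H0 in s^-1
lambda_si = 3 * OMEGA_LAMBDA * h0_si**2 / C_M_S**2   # Lambda in m^-2

print(f"H0 = {h0_si:.3e} s^-1, Lambda = {lambda_si:.2e} m^-2")
print(f"Lambda / (H0/c)^2 = {lambda_si / (h0_si / C_M_S) ** 2:.2f}")
```

In units with c = 1 the ratio \Lambda / H_0^2 is just 3\Omega_\Lambda, about 2 for these inputs, which is the coincidence of scales the letter refers to.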

Best regards,

Jeppe Trøst Nielsen, Alberto Guffanti and Subir Sarkar

On an unrelated note, Perimeter’s PSI program is now accepting applications for 2017. It’s something I wish I had known about when I was an undergrad; for those interested in theoretical physics it can be an enormous jump-start to your career. Here’s their blurb:

Perimeter Scholars International (PSI) is now accepting applications for Perimeter Institute for Theoretical Physics’ unique 10-month Master’s program.

Features of the program include:

- All student costs (tuition and living) are covered, removing financial and/or geographical barriers to entry

- Students learn from world-leading theoretical physicists – resident Perimeter researchers and visiting scientists – within the inspiring environment of Perimeter Institute

- Collaboration is valued over competition; deep understanding and creativity are valued over rote learning and examination

- PSI recruits worldwide: 85 percent of students come from outside of Canada

- PSI takes calculated risks, seeking extraordinary talent who may have non-traditional academic backgrounds but have demonstrated exceptional scientific aptitude

Apply online at http://perimeterinstitute.ca/apply.

Applications are due by February 1, 2017.

Whoa! The first time around I did wonder about that “people have built that discovery” assertion. I don’t know nearly enough to challenge it, but it did strike me that there might be circular reasoning involved. Everyone just seems to assume on some gut level that Dark Energy and Dark Matter (and gravitons! 😀 ) really must exist. I’m very interested in what challenges to the very idea of DE and DM turn up!

Going through old magazines, I found a SciAm from 2002 with an article by Milgrom about his MOND theory. Recent failures to find DM particles and, from what I’ve heard, the narrowing windows on black hole sizes have forced people back toward modified gravity theories.

And now we’re even questioning DE! Fascinating! I never liked the idea. 🙂

Hi – I was just pointed to this blog. Thanks for posting this response. I am one of the authors of the piece in Scientific American – https://blogs.scientificamerican.com/guest-blog/no-astronomers-haven-t-decided-dark-energy-is-nonexistent/ . I don’t think our piece, or that of Rubin and Hayden, is disingenuous in any way, and I don’t know of a single person in the field besides those who wrote this post who thinks we are disingenuous. The technical mistake that both we and Rubin+Hayden point out is quite obvious to anyone who reads it. Figuring out that we shouldn’t fix these distributions does not mark “progress in the field” – in fact there have been many papers before this one that say and show clearly why one shouldn’t do it (two of my own for reference – https://arxiv.org/abs/1603.01559 or https://arxiv.org/abs/1310.3824 ).

One other point that the author brings up is why things haven’t improved more from the original discovery paper to the current analyses with hundreds more supernovae. Well, they have. This post conflates two different quantities: in 1998, Riess et al. integrated the likelihood over the region Omega_L>0 (or q_0<0), and that integral was 99.7%, which was called 3 sigma for MLCS. This is not the same thing as asking how far the best fit is from (0.0, 0.0). So this post is comparing apples to oranges.
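The difference between the two questions can be illustrated with a toy two-parameter Gaussian likelihood. The best fit and covariance below are hypothetical numbers, not the 1998 or 2016 results: the point is only that "probability integrated over a half-plane" and "how many sigma the best fit sits from one specific point" are different statistics and generally give different sigma values for the same likelihood.

```python
import math
from statistics import NormalDist


def prob_half_plane(mu, cov, a, b=0.0):
    """P(a . x > b) for x ~ N(mu, cov) in 2D. A linear combination of a
    Gaussian vector is Gaussian, so this is Phi((a.mu - b) / sqrt(a^T cov a))."""
    mean = a[0] * mu[0] + a[1] * mu[1]
    var = (a[0] * a[0] * cov[0][0] + 2 * a[0] * a[1] * cov[0][1]
           + a[1] * a[1] * cov[1][1])
    return NormalDist().cdf((mean - b) / math.sqrt(var))


def point_exclusion_sigma(mu, cov, point):
    """Delta chi^2 between the best fit and `point`, converted to a two-sided
    Gaussian-equivalent sigma via the chi^2 distribution with 2 dof,
    whose survival function is exp(-dchi2 / 2)."""
    dx, dy = point[0] - mu[0], point[1] - mu[1]
    det = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
    inv = [[cov[1][1] / det, -cov[0][1] / det],
           [-cov[1][0] / det, cov[0][0] / det]]
    dchi2 = (dx * (inv[0][0] * dx + inv[0][1] * dy)
             + dy * (inv[1][0] * dx + inv[1][1] * dy))
    p_value = math.exp(-dchi2 / 2)
    return NormalDist().inv_cdf(1 - p_value / 2)


# Hypothetical likelihood: best fit 3 units from zero along the first axis,
# unit covariance. Both functions describe the same likelihood.
mu = (3.0, 0.0)
cov = [[1.0, 0.0], [0.0, 1.0]]
prob = prob_half_plane(mu, cov, a=(1.0, 0.0))
sig = point_exclusion_sigma(mu, cov, (0.0, 0.0))
print(f"integrated probability of x > 0: {prob:.5f}")
print(f"exclusion of the point (0, 0): {sig:.2f} sigma")
```

Here the half-plane integral is about 99.87%, while excluding the single point (0, 0) with two free parameters comes out at roughly 2.5 sigma, so quoting one where the other is meant can shift the apparent significance noticeably.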

I don’t think there is some giant conspiracy going on here. I’m more surprised that the authors of this post do not regret that the press has turned a paper that states there is 3 sigma evidence for an accelerating universe into claims that the universe is not accelerating.

To imply that others are proposing a giant conspiracy is a common way of disparaging those others. Your assuming that I will swallow this is insulting, and I do not appreciate it. No, it is not a giant conspiracy, but it is a sickness in today’s science that I have seen several times in my career. The fanatical desire to have an unexpected, splashy discovery, with the attention and prizes that brings, causes people to push their analyses, and their claimed results, too far. Then others come along and want to be a part of it all. There is no need for a conspiracy; it just happens. Example: BICEP.

Dear Dan, you suggested in your widely reproduced guest blog in Scientific American (which did not however offer us the same right to respond as you are utilising here) that our analysis is naive. Apparently we get a different result because of not taking into account the differences between “the mean properties of supernovae from each of the samples used”. You are even more explicit now in asserting that we made a “technical mistake”.

The disingenuity lies in your saying that “… past analyses have accounted for these differences” – implying that this is the reason for the shift of our result from that of Betoule et al (2014). As the plot above illustrates, Shariff et al (2016) get the same result as us. The shift is in fact because we use the industry-standard maximum likelihood estimator, whereas Betoule et al (and others before them) have added an arbitrary error bar to each point and adjusted it to get a \chi^2/d.o.f. of 1 for the fit to the desired answer. It is shocking that this is standard practice in this field.
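The two procedures contrasted here can be sketched on a deliberately simple toy problem: fitting a constant plus an unknown intrinsic scatter s_int to data with heterogeneous error bars. This is not the JLA likelihood and the data are simulated; it only shows that "tune s_int until \chi^2/d.o.f. = 1" and "maximise the Gaussian likelihood" solve different equations, because the likelihood contains a log-determinant term, sum(ln v_i), that the constrained \chi^2 recipe omits.

```python
import math
import random


def weighted_mean(y, var):
    """Inverse-variance weighted mean (best-fit constant model)."""
    w = [1.0 / v for v in var]
    return sum(wi * yi for wi, yi in zip(w, y)) / sum(w)


def total_variances(sig, s_int):
    return [s * s + s_int * s_int for s in sig]


def chi2(y, sig, s_int):
    var = total_variances(sig, s_int)
    m = weighted_mean(y, var)
    return sum((yi - m) ** 2 / v for yi, v in zip(y, var))


def neg2_log_like(y, sig, s_int):
    """-2 ln L up to a constant: chi^2 plus the log-determinant term."""
    var = total_variances(sig, s_int)
    m = weighted_mean(y, var)
    return sum((yi - m) ** 2 / v + math.log(v) for yi, v in zip(y, var))


def constrained_chi2_scatter(y, sig, hi=10.0):
    """Choose s_int so that chi^2 / (N - 1) = 1, by bisection
    (chi^2 decreases monotonically as s_int grows)."""
    target = len(y) - 1  # one fitted parameter: the mean
    lo = 0.0
    if chi2(y, sig, lo) <= target:
        return 0.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if chi2(y, sig, mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def max_likelihood_scatter(y, sig, lo=0.0, hi=2.0, iters=100):
    """Minimise -2 ln L over s_int by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = neg2_log_like(y, sig, c), neg2_log_like(y, sig, d)
    for _ in range(iters):
        if fc < fd:
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = neg2_log_like(y, sig, c)
        else:
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = neg2_log_like(y, sig, d)
    return 0.5 * (a + b)


# Simulated data: heterogeneous errors plus a true intrinsic scatter of 0.15.
rng = random.Random(0)
sig = [rng.uniform(0.05, 0.4) for _ in range(300)]
y = [rng.gauss(0.0, math.sqrt(s * s + 0.15 ** 2)) for s in sig]

s_chi2 = constrained_chi2_scatter(y, sig)
s_ml = max_likelihood_scatter(y, sig)
print(f"constrained chi^2: {s_chi2:.4f}  maximum likelihood: {s_ml:.4f}")
```

In this toy both estimates land near the true value; the difference matters more when, as in the supernova fits, the per-point variance also depends on other fitted parameters, so dropping the log-determinant term can pull those parameters as well.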

Rubin & Hayden are equally disingenuous in stating that it is our “model of the x1 and c distributions” that “drives the difference with the JLA analysis” so our analysis is apparently “both incorrect in its method and unreasonable in its assumptions”. David Rubin (with whom my coauthor Jeppe has had constructive exchanges) should know what the real story is.

The point is that this is no longer just a game for supernova astronomers to play … using jargon and technicalities that are not clear to others. The implications of cosmic acceleration are so profound that the claim will come under increasing critical scrutiny and be held to the same standards as any other discovery of such fundamental significance. We are happy to have started this debate for the benefit of fellow physicists even if sections of the press have gone overboard in reporting our result (should we really “regret” that the Daily Mail reported: “The universe might NOT be expanding”?)! It is for physicists to decide whether a 3 (or even 4) sigma result constitutes discovery of the accelerating expansion of the universe through observations of distant supernovae. And hopefully other observations which are said to have provided independent confirmation will be equally closely examined. Do we not all want to know the truth?

Best wishes for your work.

Whether we are at 2, 3 or even 5 sigma is not all that convincing to me, as there are too many systematic uncertainties. As Saul Perlmutter states in the ‘Status of the Cosmological Parameters’:

“However, if you prefer to take a more agnostic view and consider the flatness of the universe to be still unproven, then we must ask if it is possible that we live off of the flat universe line in a universe with low mass density and no cosmological constant. This case is still ruled out at the 99 percent level statistically, but now it would only take a 15 to 20 percent systematic error to make the data consistent with this open universe. We have spent most of our effort in the last year or so looking for such systematics. Possible candidates include absorption of light by dust, supernova evolution, selection bias in the supernova detection, and gravitational lensing effects.”

99% is roughly 3 sigma (strictly about 2.6 sigma; 3 sigma corresponds to 99.7%). One might consider this observation as now old – as we have much more data, but I’m waiting for better results, like the Webb Telescope which will hit z = 25.
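Since several comments translate between confidence levels and "sigma", a small conversion helper may be useful. It assumes a Gaussian distribution and distinguishes the two-sided convention (probability inside ±n sigma) from the one-sided one (probability below +n sigma); which convention a given paper uses is something to check case by case.

```python
from statistics import NormalDist


def sigma_two_sided(confidence):
    """Number of sigma whose +/- band contains `confidence` of a Gaussian."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def sigma_one_sided(confidence):
    """Number of sigma below which `confidence` of a Gaussian lies."""
    return NormalDist().inv_cdf(confidence)


for cl in (0.99, 0.997, 0.9973):
    print(cl, round(sigma_two_sided(cl), 3), round(sigma_one_sided(cl), 3))
```

With these conventions 99% corresponds to about 2.58 sigma two-sided (2.33 one-sided), and 3 sigma two-sided corresponds to 99.73%.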

Dan,

Do you interact with the students of PSI? I’ll be there next August and it’d be nice to speak with you. You have a great blog.

I think you’re confusing two different people. Dan is at KICP, at U Chicago. I’m the one at Perimeter.

You’re right! Sorry for that, Matt

If you were asking me, I do interact with the PSI students a fair amount, but this is my last year here, so I probably won’t be around by the time you are.

One technical point – the ‘Bayesian Hierarchical Model’ (Shariff et al, http://adsabs.harvard.edu/cgi-bin/bib_query?arXiv:1510.05954) also assumes a single Gaussian distribution for both color and stretch for the whole sample – see Table 1. I have confirmed this with the authors, and we are working together to include selection effects, evolution and other aspects in their model. So I would not state that this is an independent confirmation. One thing I am doing with those authors that I would be happy to do for anyone is generate realistic simulations of supernova surveys for you to test your code on (like we do here: https://arxiv.org/abs/1610.04677). These simulations accurately reproduce selection effects and survey properties (like depth, cadence, weather, seeing…) and have been used for all the recent cosmology analyses like Betoule et al. Anyway, I’d be happy to send off any simulated survey to test, just let me know!