(My last post had a poll in it! If you haven’t responded yet, please do.)

Earlier this month, philosopher Richard Dawid ran a workshop entitled “Why Trust a Theory? Reconsidering Scientific Methodology in Light of Modern Physics” to discuss his idea of “non-empirical theory confirmation” for string theory, inflation, and the multiverse. They haven’t published the talks online yet, so I’m stuck reading coverage, mostly these posts by skeptical philosopher Massimo Pigliucci. I find the overall concept annoying, and may rant about it later. For now, though, I’d like to talk about a talk on the second day, by philosopher Chris Wüthrich, about black hole entropy.

Black holes, of course, are the entire-stars-collapsed-to-a-point-from-which-no-light-can-escape that everyone knows and loves. Entropy is often thought of as the scientific term for chaos and disorder, the universe’s long slide towards dissolution. In reality, it’s a bit more complicated than that.

Can black holes be disordered? Naively, that doesn’t seem possible. How can a single point be disorderly?

Thought about in a bit more detail, the conclusion seems even stronger. Via something called the “No Hair Theorem”, it’s possible to prove that black holes can be described completely with just three numbers: their mass, their charge, and how fast they are spinning. With just three numbers, how can there be room for chaos?

On the other hand, you may have heard of the Second Law of Thermodynamics. The Second Law states that entropy always increases (more precisely, that the total entropy of an isolated system never decreases). Absent external support, things will always slide towards disorder eventually.

Combine this with black holes, though, and you get some weird implications. In particular, what happens when something disordered falls into a black hole? Does the disorder just “go away”? Doesn’t that violate the Second Law?

This line of reasoning has led to the idea that black holes have entropy after all. It led Bekenstein to calculate the entropy of a black hole based on how much information is “hidden” inside, and Hawking to find that black holes in a quantum world should radiate as if they had a temperature consistent with that entropy. One of the biggest successes of string theory is an explanation for this entropy. In string theory, black holes aren’t perfect points: they have structure, arrangements of strings and higher dimensional membranes, and this structure can be disordered in a way that seems to give the right entropy.
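
To put a number on it: the standard Bekenstein-Hawking result makes a black hole’s entropy proportional to the area A of its horizon, S = k_B c³ A / (4 G ħ). Here’s a minimal sketch, assuming a non-rotating, uncharged black hole (so only the mass matters) and rounded values for the constants; the function names are just illustrative.

```python
import math

# Approximate SI values (CODATA 2018 for the fundamental constants).
C = 299_792_458.0          # speed of light, m/s
G = 6.67430e-11            # Newton's constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34     # reduced Planck constant, J s
M_SUN = 1.98847e30         # solar mass, kg (approximate)


def schwarzschild_radius(mass_kg: float) -> float:
    """Horizon radius r_s = 2GM/c^2 of a non-rotating, uncharged black hole, in meters."""
    return 2.0 * G * mass_kg / C**2


def bekenstein_hawking_entropy(mass_kg: float) -> float:
    """Entropy in units of Boltzmann's constant: S/k_B = A c^3 / (4 G hbar), A = 4 pi r_s^2."""
    area = 4.0 * math.pi * schwarzschild_radius(mass_kg) ** 2
    return area * C**3 / (4.0 * G * HBAR)


s_sun = bekenstein_hawking_entropy(M_SUN)
print(f"S/k_B for one solar mass: {s_sun:.2e}")
print(f"same thing in bits:       {s_sun / math.log(2):.2e}")
```

For one solar mass this comes out around 10⁷⁷ in units of k_B. Since the horizon area grows with the square of the mass, doubling the mass quadruples the entropy.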

Note that none of this has been tested experimentally. Hawking radiation, if it exists, is very faint: not the sort of thing we could detect with a telescope. Wüthrich is worried that Bekenstein’s original calculation of black hole entropy might have been on the wrong track, which would undermine one of string theory’s most well-known accomplishments.
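
Just how faint? For a non-rotating, uncharged black hole the Hawking temperature is T_H = ħ c³ / (8π G M k_B), so heavier black holes are colder. A rough sketch comparing a solar-mass black hole with the cosmic microwave background (the CMB value of about 2.725 K is an assumption plugged in for comparison, and the function names are hypothetical):

```python
import math

C = 299_792_458.0          # speed of light, m/s
G = 6.67430e-11            # Newton's constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34     # reduced Planck constant, J s
K_B = 1.380649e-23         # Boltzmann constant, J/K
M_SUN = 1.98847e30         # solar mass, kg (approximate)
T_CMB = 2.725              # cosmic microwave background temperature, K (approximate)


def hawking_temperature(mass_kg: float) -> float:
    """T_H = hbar c^3 / (8 pi G M k_B), in kelvin."""
    return HBAR * C**3 / (8.0 * math.pi * G * mass_kg * K_B)


def mass_at_temperature(temp_k: float) -> float:
    """Invert T_H(M): the black-hole mass whose Hawking temperature equals temp_k."""
    return HBAR * C**3 / (8.0 * math.pi * G * K_B * temp_k)


t_sun = hawking_temperature(M_SUN)
print(f"Hawking temperature, one solar mass: {t_sun:.2e} K")
print(f"the CMB is about {T_CMB / t_sun:.1e} times hotter")
print(f"mass whose Hawking temperature matches the CMB: {mass_at_temperature(T_CMB):.2e} kg")
```

A solar-mass black hole comes out at roughly 6 × 10⁻⁸ K, far colder than the microwave background, so its own glow would be swamped. Only black holes lighter than about 4.5 × 10²² kg, less than the mass of the Moon, would be hotter than the CMB.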

I don’t know Wüthrich’s full argument, since the talks haven’t been posted online yet. All I know is Pigliucci’s summary. From that summary, it looks like Wüthrich’s primary worry is about two different definitions of entropy.

See, when I described entropy as “disorder”, I was being a bit vague. There are actually two different definitions of entropy. The older one, Gibbs entropy, grows with the number of states of a system. What does that have to do with disorder?

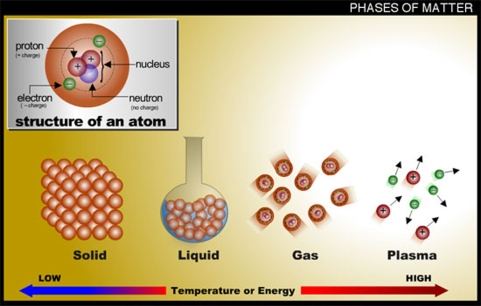

Think about two different substances: a gas, and a crystal. Both are made out of atoms, but the patterns involved are different. In the gas, atoms are free to move, while in the crystal they’re (comparatively) fixed in place.

There are many different ways the atoms of a gas can be arranged and still be a gas, but fewer in which they can be a crystal, so a gas has more entropy than a crystal. Intuitively, the gas is more disordered.
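
Here’s a toy version of that counting, using a hypothetical one-dimensional row of sites rather than a realistic model of either phase. In the “gas” the atoms may occupy any of the sites; in the “crystal” they must sit in one contiguous, evenly spaced block, so the only freedom left is where the block starts. The entropy (in units of Boltzmann’s constant k_B) is the logarithm of the number of allowed arrangements W, which is what the Gibbs formula gives when every arrangement is equally likely.

```python
import math


def ln_microstates_gas(sites: int, atoms: int) -> float:
    """ln W when the atoms may sit on any `atoms` of the `sites` positions."""
    return math.log(math.comb(sites, atoms))


def ln_microstates_crystal(sites: int, atoms: int) -> float:
    """ln W when the atoms must form one contiguous block.

    The only freedom left is where the block starts: sites - atoms + 1 choices.
    """
    return math.log(sites - atoms + 1)


SITES, ATOMS = 100, 20   # arbitrary toy sizes
s_gas = ln_microstates_gas(SITES, ATOMS)
s_crystal = ln_microstates_crystal(SITES, ATOMS)
print(f"gas:     S/k_B = ln W = {s_gas:.1f}")
print(f"crystal: S/k_B = ln W = {s_crystal:.1f}")
```

Even with only a hundred sites, the gas has around 10²⁰ arrangements to the crystal’s 81, and the gap only widens as the system grows.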

When Bekenstein calculated the entropy of a black hole he didn’t use Gibbs entropy, though. Instead, he used Shannon entropy, a concept from information theory. Shannon entropy measures the amount of information in a message, with a formula very similar to that of Gibbs entropy: the more different ways you can arrange something, the more information you can use it to send. Bekenstein used this formula to calculate the amount of information that gets hidden from us when something falls into a black hole.
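
For comparison, here are the two formulas side by side, summing over states i with probabilities p_i: Gibbs entropy is S = -k_B Σ p_i ln p_i and Shannon entropy is H = -Σ p_i log₂ p_i. For any probability distribution they differ only by the constant factor k_B ln 2. A quick check, with two made-up example distributions:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def shannon_entropy_bits(probs):
    """H = -sum p log2 p, in bits. States with p == 0 contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """S = -k_B sum p ln p, in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


uniform8 = [1 / 8] * 8          # eight equally likely states
skewed = [0.7, 0.1, 0.1, 0.1]   # one state much more likely than the rest

for probs in (uniform8, skewed):
    h = shannon_entropy_bits(probs)
    s = gibbs_entropy(probs)
    # Dividing out k_B ln 2 turns the Gibbs entropy back into the Shannon entropy.
    print(f"H = {h:.3f} bits   S = {s:.3e} J/K   S / (k_B ln 2) = {s / (K_B * math.log(2)):.3f}")
```

Eight equally likely states give exactly 3 bits, the information needed to single one out. The skewed distribution gives less, because you can already make a good guess.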

Wüthrich’s worry here (again, as far as Pigliucci describes) is that Shannon entropy is a very different concept from Gibbs entropy. Shannon entropy measures information, while Gibbs entropy is something “physical”. So by using one to predict the other, are predictions about black hole entropy just confused?

It may well be he has a deeper argument for this, one that wasn’t covered in the summary. But if this is accurate, Wüthrich is missing something fundamental. Shannon entropy and Gibbs entropy aren’t two different concepts. Rather, they’re both ways of describing a core idea: entropy is a measure of ignorance.

A gas has more entropy than a crystal because it can be arranged in a larger number of different ways. But let’s not talk about a gas. Let’s talk about a specific arrangement of atoms: one is flying up, one to the left, one to the right, and so on. Space them apart, but be very specific about how they are arranged. This arrangement could well be a gas, but now it’s a specific gas. And because we’re this specific, there are now many fewer states the gas can be in, so this (specific) gas has less entropy!
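
A toy way to see this: take a hypothetical box of ten particles, each sitting in either the left or the right half, and count how many arrangements fit each description. The entropy of a description (in units of k_B) is the log of that count, so the more specific the description, the lower the entropy, bottoming out at zero for one exact arrangement. The particle count and the particular arrangement below are arbitrary choices for illustration.

```python
import itertools
import math

N = 10  # toy number of particles; each is in the left ("L") or right ("R") half


def entropy_of_description(matches) -> float:
    """ln of the number of microstates consistent with a description (S in units of k_B)."""
    count = sum(1 for state in itertools.product("LR", repeat=N) if matches(state))
    return math.log(count)


specific_state = tuple("LRLLRRLRLR")  # one exact arrangement, chosen arbitrarily

descriptions = {
    "some gas of N particles": lambda s: True,
    "exactly 5 particles on the left": lambda s: s.count("L") == 5,
    "this exact arrangement": lambda s: s == specific_state,
}

for label, matches in descriptions.items():
    print(f"{label:34s} S/k_B = {entropy_of_description(matches):.3f}")
```

The physical box is the same in all three lines; only what we claim to know about it changes.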

Now of course, this is a very silly way to describe a gas. In general, we don’t know what every single atom of a gas is doing; that’s why we call it a gas in the first place. But it’s that lack of knowledge that we call entropy. Entropy isn’t just something out there in the world; it’s a feature of our descriptions…but one that, nonetheless, has important physical consequences. The Second Law still holds: the world goes from lower entropy to higher entropy. And while that may seem strange, it’s actually quite logical: the things that we describe in more vague terms should become more common than the things we describe in specific terms. After all, there are many more of them!
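
The textbook toy model for this is the Ehrenfest urn model: particles split between two halves of a box, and at each step one randomly chosen particle hops to the other side. Nothing in the rule prefers any direction, yet starting with everything on one side, the entropy of the “how many are on the left” description climbs toward its maximum, simply because evenly mixed arrangements vastly outnumber lopsided ones. A minimal sketch, with the particle count, step count, and random seed picked arbitrarily:

```python
import math
import random


def macrostate_entropy(n_total: int, n_left: int) -> float:
    """S/k_B = ln C(n_total, n_left): how many microstates share this left/right count."""
    return math.log(math.comb(n_total, n_left))


def ehrenfest_run(n_total: int = 100, steps: int = 2000, seed: int = 1) -> list[float]:
    """Ehrenfest urn model: each step, one uniformly random particle hops to the other half.

    Starts with every particle on the left, the lowest-entropy macrostate.
    Returns the macrostate entropy after each step (including the start).
    """
    rng = random.Random(seed)
    n_left = n_total
    history = [macrostate_entropy(n_total, n_left)]
    for _ in range(steps):
        # The chosen particle is on the left with probability n_left / n_total.
        if rng.randrange(n_total) < n_left:
            n_left -= 1
        else:
            n_left += 1
        history.append(macrostate_entropy(n_total, n_left))
    return history


history = ehrenfest_run()
print(f"start: {history[0]:.2f}   after 2000 hops: {history[-1]:.2f}   "
      f"maximum possible: {macrostate_entropy(100, 50):.2f}")
```

Note that the entropy can tick down on individual steps. For a hundred particles the dips are small and brief; for the enormous numbers of atoms in real systems, a noticeable decrease becomes overwhelmingly unlikely, which is the sense in which the Second Law holds.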

Entropy isn’t the only thing like this. In the past, I’ve bemoaned the difficulty of describing the concept of gauge symmetry. Gauge symmetry is in some ways just part of our descriptions: we prefer to describe fundamental forces in a particular way, and that description has redundant parameters. We have to make those redundant parameters “go away” somehow, and that leads to non-existent particles called “ghosts”. However, gauge symmetry also has physical consequences: it was how people first knew that there had to be a Higgs boson, long before it was discovered. And while it might seem weird to think that a redundancy could imply something as physical as the Higgs, the success of the concept of entropy should make this much less surprising. Much of what we do in physics is reasoning about different descriptions, different ways of dividing up the world, and then figuring out the consequences of those descriptions. Entropy is ignorance…and if our ignorance obeys laws, if it’s describable mathematically, then it’s as physical as anything else.

yes! Gibbs entropy and Shannon entropy are actually two sides of the same coin.

The problem is that entropy is usually presented as something intrinsic, like energy. Actually, as you say, entropy is a measure of our lack of knowledge. It measures how many states a system might be in, subject to some measured constraints. We can’t measure the microscopic details of macroscopic systems; rather, we measure averages: the average density, average temperature, etc.

Recently I came across a brilliant description of the subjective nature of entropy. It has to do with the Gibbs paradox. The discussion on page 7 is particularly illuminating.

gibbs.paradox.pdf

To boil it all down to a pithy three-liner: another way of stating this is that entropy reduces the useful work we can extract. But ‘useful work’ is itself subjective; it has to do with how well we can control the system. And control is predicated on how much information we have about the system.

by “average temperature” I meant “average energy”

You lost me on that last line. That something obeys laws and is mathematically describable does not make it physical. To me, that’s a logic error called “putting the cart before the horse.” Things that are physical obey laws that we seek to understand and describe mathematically. Further, we can conceive of any number of things, the number of stars in the sky, say. We can measure this, and predict all kinds of things from it, and while there is a set of all stars, there is not an object called “total star count”.

The line was a bit badly phrased, but there is a point I’m trying to make there. Everything is theory-mediated. “Stars” aren’t just brute physical things in the sky, they’re a particular way we cordon off our observations: the entropy of a star is very different from the entropy of the specific arrangement of particles within a star at one particular moment. That’s what I meant by “as physical as anything else”: not that everything lawlike is physical, but that most of what we reason about in physics is “as unphysical” as entropy.

I still feel the need to intercede on Plato’s behalf:

1. Ideas are the product of the physical activity of the human brain, so they are also a particular configuration of matter in the universe.

2. Some things exist independently of their specific embodiments: the golden ratio and the number “e”, for example.

Apart from Plato’s ideas, psychology has archetypes, and it is not too difficult to give them a serious physical basis (dx.doi.org/10.13140/RG.2.1.1826.6086).

I’m approving this because it’s loosely on-topic, but it was close. Be aware that I don’t allow comments that are merely link-spam; you do have to actually engage with the post or other commenters.

The name is “Bekenstein”.

Thanks, fixed!

“There is still no rigorous mathematical proof of the no-hair theorem.” – Wikipedia

On top of that, the theorem only applies to a ‘flat background’ situation, which physics shows is not the case in reality. In other words, the noisy environment that any physical black hole lives in will give it hair aplenty, and furthermore it may not even house a singularity.

Brandon Carter: (Kerr solution)

“Thus we reach the conclusion that a timelike or null geodesic or orbit cannot reach the singularity under any circumstances except in the case where it is confined to the equator, cos θ = 0. … Thus as symmetry is progressively reduced, starting from the Schwarzschild solution, the extent of the class of geodesics reaching the singularity is steadily reduced likewise, … which suggests that after further reduction in symmetry, incomplete geodesics may cease to exist altogether”

This is indeed true. The no hair theorem tends to be a good lies-to-children version of the general point that it’s hard to take into account the degrees of freedom a black hole ought to have classically.